Have you ever wondered how machines recognize and understand human movement when so many variables might influence it? The answer to this question lies in human pose estimation.

This article aims to uncover how human pose estimation (HPE) technology can be a game-changer in the medical rehabilitation sector through its ability to identify and predict human body location and orientation.

Human pose estimation (HPE) is a computer vision technology that identifies and classifies specific points on the human body. These points represent our limbs and joints, and they can be used to calculate flexion angles and estimate a person’s pose or posture.
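To make the idea of a flexion angle concrete, here is a minimal sketch that computes the angle at a joint from three 2D key points, such as hip, knee, and ankle. The function name and the (x, y) pixel convention are assumptions for illustration. Real HPE libraries return key points in their own formats.

```python
import math

def joint_angle(a, b, c):
    """Return the angle ABC in degrees, where b is the joint (e.g. the knee).

    a, b, c are (x, y) key point coordinates, e.g. hip, knee, ankle.
    """
    # Vectors pointing from the joint toward its two neighboring key points
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("key points must be distinct")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point drift before acos
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))

# Straight leg: hip, knee, and ankle are collinear
print(round(joint_angle((0, 0), (0, 1), (0, 2)), 1))  # 180.0
# Knee bent at a right angle
print(round(joint_angle((0, 0), (0, 1), (1, 1)), 1))  # 90.0
```

A fully extended joint reads close to 180 degrees, and the value shrinks as the joint flexes.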

Understanding the proper joint angle during a particular exercise is fundamental to the work of physiotherapists, fitness instructors, and artists.

What if a machine could understand these things as well as an experienced physiotherapist or fitness instructor? The results it could deliver would be surprising!

Most HPE techniques work by capturing an RGB image with an optical sensor (a camera) and using that image to identify body parts and the overall pose. The core function of this technology is to detect points of interest on the human body, and from these key points we can even build a 2D or 3D model of the body.

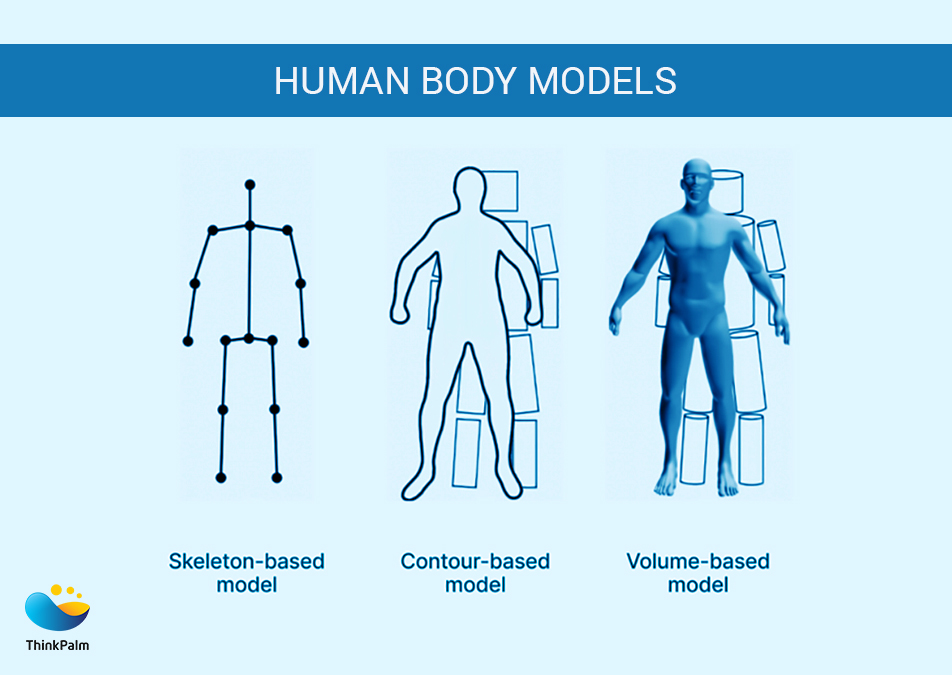

There are three major human model types:

The skeleton-based representation, also known as the kinematic model, consists of a set of key points (joints), such as ankles, knees, shoulders, elbows, and wrists, and is used for both 2D and 3D pose estimation. It is frequently used to represent the relationships between different parts of the body.

The planar model is used for 2D pose estimation. It represents the body as a generalized outline with measurements of the torso and limbs, and techniques such as active shape modeling (ASM) use principal component analysis (PCA) to capture the body’s deformations.

The volumetric model is used for 3D pose estimation. It represents the human body with 3D meshes and shapes, and volumetric datasets contain many such 3D body models and poses.
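The skeleton-based (kinematic) model is essentially a graph: key points are nodes, and the bones connecting them are edges. The following minimal sketch assumes a small, hypothetical subset of joints and hand-picked coordinates. Real datasets and libraries define their own key point lists and orderings.

```python
import math

# Hypothetical bones of a kinematic tree, as pairs of joint names.
# Real models (e.g. COCO-style skeletons) define their own joint lists.
SKELETON = [
    ("left_shoulder", "left_elbow"),
    ("left_elbow", "left_wrist"),
    ("left_shoulder", "left_hip"),
    ("left_hip", "left_knee"),
    ("left_knee", "left_ankle"),
]

def limb_lengths(pose):
    """Map each bone in SKELETON to its length, given {joint: (x, y)}."""
    lengths = {}
    for start, end in SKELETON:
        # Skip bones whose endpoints were not detected in this frame
        if start in pose and end in pose:
            lengths[(start, end)] = math.dist(pose[start], pose[end])
    return lengths

pose = {
    "left_shoulder": (100, 50), "left_elbow": (100, 80),
    "left_wrist": (130, 80), "left_hip": (100, 150),
    "left_knee": (100, 200),  # ankle not detected in this frame
}
for bone, length in limb_lengths(pose).items():
    print(bone, length)
```

Keeping the edges separate from the coordinates lets the same skeleton definition be reused for every frame, while missing joints simply drop the affected bones.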

Pose estimation predicts and tracks the location and orientation of a person or object. It allows software to estimate a body’s spatial position, or pose, in a still image or video.

There are two types of pose estimation: single-pose and multi-pose. Single-pose estimation estimates the pose of a single object in a given scene, while multi-pose estimation detects the poses of multiple objects.

Various strategies have been used to tackle human pose estimation, but existing techniques can be broadly divided into three groups:

As research and development in HPE expanded, new difficulties arose.

Multi-person pose estimation was one of them.

Deep neural networks (DNNs) are excellent at estimating a single person’s pose, but they struggle to estimate multiple poses for the following reasons:

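Researchers proposed two strategies to address these issues: the top-down approach, which first detects each person and then estimates the key points within each detection, and the bottom-up approach, which first detects all key points in the image and then groups them into individual people.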

Let’s now quickly review some deep learning models used for human pose estimation, including multi-person pose estimation.

OpenPose is built on a convolutional neural network (CNN) architecture. It uses a VGG-19 network to extract patterns and representations from the input image. The network first detects the body parts, or key points, in the image and then uses part affinity fields (PAFs) to associate the appropriate key points into pairs, which makes OpenPose a bottom-up approach.
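The pairing step can be illustrated with a simplified version of OpenPose’s part affinity field (PAF) idea. A PAF stores a 2D direction vector at each pixel. A candidate limb between two detected key points scores highly when the field’s vectors, sampled along the segment, point in the same direction as the segment. This sketch makes several assumptions:

* The field is a synthetic NumPy array.
* The sampling scheme is deliberately plain.
* The greedy pairing is a simplification of OpenPose’s matching step, not its actual implementation.

```python
import numpy as np

def limb_score(paf, p1, p2, num_samples=10):
    """Average alignment between a PAF and the segment p1 -> p2.

    paf has shape (H, W, 2): an (x, y) vector at each pixel.
    p1, p2 are (x, y) key point candidates in pixel coordinates.
    """
    p1, p2 = np.asarray(p1, float), np.asarray(p2, float)
    direction = p2 - p1
    length = np.linalg.norm(direction)
    if length == 0:
        return 0.0
    unit = direction / length
    # Sample points evenly along the segment and look up the field there
    ts = np.linspace(0.0, 1.0, num_samples)
    points = p1 + ts[:, None] * direction
    xs = np.clip(np.round(points[:, 0]).astype(int), 0, paf.shape[1] - 1)
    ys = np.clip(np.round(points[:, 1]).astype(int), 0, paf.shape[0] - 1)
    vectors = paf[ys, xs]  # shape (num_samples, 2)
    # Dot product of each field vector with the limb direction, averaged
    return float(np.mean(vectors @ unit))

def greedy_match(paf, starts, ends, min_score=0.5):
    """Pair start/end candidates, highest score first, each used at most once."""
    candidates = sorted(
        ((limb_score(paf, s, e), i, j)
         for i, s in enumerate(starts) for j, e in enumerate(ends)),
        reverse=True,
    )
    used_i, used_j, pairs = set(), set(), []
    for score, i, j in candidates:
        if score >= min_score and i not in used_i and j not in used_j:
            pairs.append((i, j, score))
            used_i.add(i)
            used_j.add(j)
    return pairs

# Synthetic field: vectors point straight down (+y) in columns 5 and 15,
# as if two forearms were hanging vertically there; zero elsewhere.
paf = np.zeros((30, 30, 2))
paf[:, 5] = (0.0, 1.0)
paf[:, 15] = (0.0, 1.0)
elbows = [(5, 5), (15, 5)]
wrists = [(15, 20), (5, 20)]
print(greedy_match(paf, elbows, wrists))  # [(1, 0, 1.0), (0, 1, 1.0)]
```

The diagonal candidates (elbow 0 to wrist 0, elbow 1 to wrist 1) cross mostly empty field and score well below the threshold. Only the vertically aligned pairs survive.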

You may have heard of Mask R-CNN in other deep learning contexts: it is a well-known architecture for tasks such as object detection and instance segmentation. It can also be extended to perform HPE as a top-down approach. This works in two steps, person detection and part (key point) detection, whose outputs are combined so that each person’s key points are predicted within that person’s detected region.
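In a top-down pipeline, key points are predicted inside each detected person’s bounding box and then mapped back to full-image coordinates. The sketch below assumes the following:

* Each person comes with a box given as (x, y, width, height).
* Each person also has a set of per-key-point heatmaps computed over that box.
* Each heatmap is decoded with a plain argmax.

Mask R-CNN’s actual keypoint head is more involved. Treat this as an illustration of the top-down idea rather than of that model.

```python
import numpy as np

def decode_heatmaps(heatmaps, box):
    """Convert one person's per-key-point heatmaps to image coordinates.

    heatmaps: array of shape (K, h, w), one map per key point, covering the box.
    box: (x, y, width, height) of the detected person in the full image.
    Returns a list of (x, y, score) tuples, one per key point.
    """
    bx, by, bw, bh = box
    k, h, w = heatmaps.shape
    keypoints = []
    for i in range(k):
        # Cell with the strongest response in this key point's heatmap
        row, col = np.unravel_index(np.argmax(heatmaps[i]), (h, w))
        score = float(heatmaps[i, row, col])
        # Scale the cell center to box pixels, then offset by the box origin
        x = bx + (col + 0.5) * bw / w
        y = by + (row + 0.5) * bh / h
        keypoints.append((float(x), float(y), score))
    return keypoints

# One person box at (200, 100), size 80 x 160, with 2 key points on a 4 x 2 grid
heatmaps = np.zeros((2, 4, 2))
heatmaps[0, 0, 1] = 0.9  # e.g. head: top row, right column
heatmaps[1, 3, 0] = 0.7  # e.g. foot: bottom row, left column
print(decode_heatmaps(heatmaps, (200, 100, 80, 160)))
# [(260.0, 120.0, 0.9), (220.0, 240.0, 0.7)]
```

Because each person is processed in their own box, the cost of a top-down method grows with the number of people detected.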

DeepCut is another multi-person pose estimation model, and it takes a bottom-up approach. It aims to perform person detection and pose estimation jointly. DeepCut’s authors view the process in the following order:

DeepPose is a deep neural network (DNN)-based approach proposed by Google researchers. It regresses the locations of all key points directly, using a network that combines convolutional, pooling, and fully connected layers to achieve a high-fidelity final result.

DeepPose is often regarded as a landmark method because it was the first to apply deep neural networks to HPE, using a cascade of DNN regressors to locate key points.
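DeepPose frames HPE as regression. The network outputs joint coordinates normalized relative to a bounding box. Later stages of the cascade then refine each joint by regressing its position within a box centered on the previous estimate.

Below is a minimal sketch of that normalization and refinement loop. The stages are hypothetical stand-in functions, not trained networks: each one "sees" the true joint and recovers only part of the remaining error. The box size is arbitrary.

```python
def normalize(point, box):
    """Map an (x, y) point into box-relative units; box = (cx, cy, w, h)."""
    cx, cy, w, h = box
    return ((point[0] - cx) / w, (point[1] - cy) / h)

def denormalize(point, box):
    """Inverse of normalize: box-relative units back to image pixels."""
    cx, cy, w, h = box
    return (point[0] * w + cx, point[1] * h + cy)

def cascade(initial, stages, box_size=40.0):
    """Refine one joint estimate with a sequence of regression stages."""
    estimate = initial
    for stage in stages:
        # Each stage looks at a box centered on the current estimate
        box = (estimate[0], estimate[1], box_size, box_size)
        # The stage predicts the joint position in box-relative units
        estimate = denormalize(stage(box), box)
    return estimate

def make_fake_stage(true_joint, gain):
    """Hypothetical stand-in for a trained regressor (not a real network)."""
    def stage(box):
        tx, ty = normalize(true_joint, box)
        # Pretend the network recovers only `gain` of the remaining offset
        return (gain * tx, gain * ty)
    return stage

true_knee = (110.0, 90.0)
stages = [make_fake_stage(true_knee, 0.5) for _ in range(2)]
print(cascade((100.0, 100.0), stages))  # (107.5, 92.5)
```

Each stage halves the remaining error here, which mirrors the intent of a cascade: later stages work on smaller, higher-resolution regions to correct what earlier stages missed.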

MediaPipe Pose, an ML solution for high-fidelity body pose tracking, uses BlazePose research to infer 33 3D landmarks and a full-body background segmentation mask from RGB video frames. The same research also powers the ML Kit Pose Detection API.
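MediaPipe Pose reports each of its 33 landmarks with x and y normalized by the image width and height, a relative depth z, and a visibility score. The helper below converts such landmarks to pixel coordinates and looks up a few joints by index.

It deliberately avoids calling MediaPipe so that it runs on its own. Its input is assumed to be a plain list of (x, y, z, visibility) tuples, and the 0.5 visibility cut-off is an arbitrary choice. The indices follow MediaPipe’s published landmark map, but check them against the version you use.

```python
# Subset of MediaPipe Pose landmark indices (33 landmarks in total)
LANDMARK_INDEX = {
    "left_shoulder": 11, "right_shoulder": 12,
    "left_elbow": 13, "right_elbow": 14,
    "left_wrist": 15, "right_wrist": 16,
    "left_hip": 23, "right_hip": 24,
    "left_knee": 25, "right_knee": 26,
    "left_ankle": 27, "right_ankle": 28,
}

def to_pixels(landmarks, image_width, image_height, names, min_visibility=0.5):
    """Return {name: (x_px, y_px)} for requested joints that are visible enough.

    landmarks: 33 (x, y, z, visibility) tuples, with x and y normalized by the
    image width and height. z is ignored in this 2D sketch.
    """
    if len(landmarks) != 33:
        raise ValueError("expected 33 landmarks")
    points = {}
    for name in names:
        x, y, _z, visibility = landmarks[LANDMARK_INDEX[name]]
        if visibility >= min_visibility:
            points[name] = (x * image_width, y * image_height)
    return points

# Fake landmarks for illustration: everything at the image center, mostly visible
landmarks = [(0.5, 0.5, 0.0, 0.9)] * 33
landmarks[25] = (0.25, 0.75, 0.0, 0.95)  # left knee
landmarks[27] = (0.25, 0.9, 0.0, 0.2)    # left ankle, low visibility
print(to_pixels(landmarks, 640, 480, ["left_hip", "left_knee", "left_ankle"]))
# {'left_hip': (320.0, 240.0), 'left_knee': (160.0, 360.0)}
```

With MediaPipe’s Python solution API, a list comprehension such as `[(lm.x, lm.y, lm.z, lm.visibility) for lm in results.pose_landmarks.landmark]` produces input in this shape.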

Now let’s review a few significant use cases and applications of HPE in the healthcare sector.

Physical therapy is a natural use case for human activity tracking. Especially in this era of telemedicine, home visits have become more flexible and diverse. Artificial intelligence enables more sophisticated remote analysis, making it possible to build more useful HPE-based apps.

For analyzing rehabilitation activities, HPE is used much as it is in fitness applications, except that rehabilitation demands higher accuracy. When someone is recovering from an injury, HPE can help detect postures and give users feedback on specific physical exercises. The payoffs are:

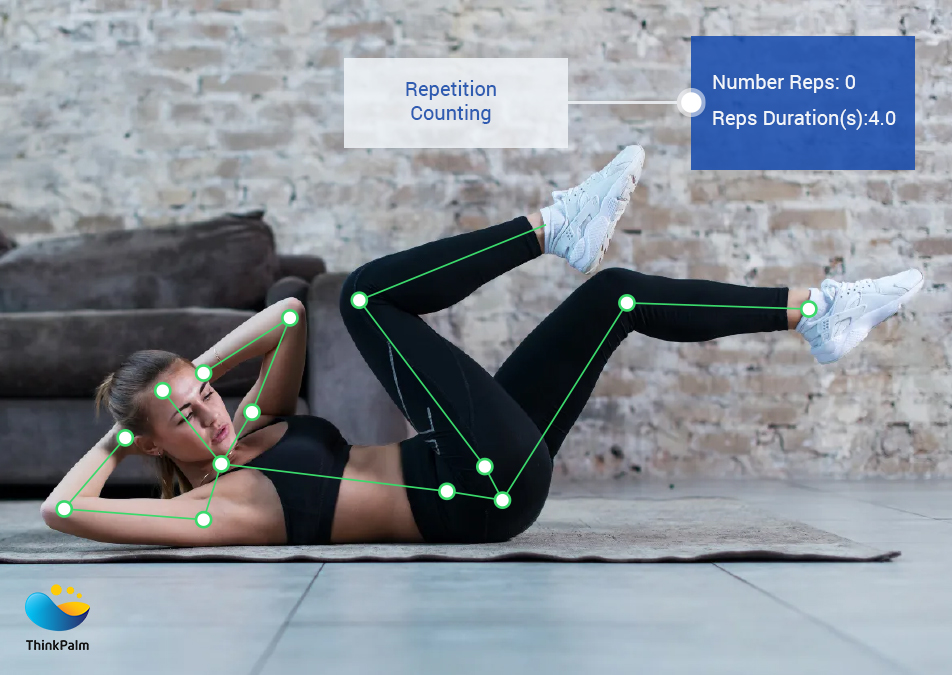
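For rehabilitation, joint angles measured by HPE can be compared against a range prescribed by the therapist. The sketch below assumes per-frame knee angles have already been computed, for example from hip, knee, and ankle key points. It then checks the range of motion reached in one repetition. The function names, target values, and messages are hypothetical, not clinical guidance.

```python
def range_of_motion(angles):
    """Return (min_angle, max_angle, span) for one repetition's joint angles."""
    min_angle, max_angle = min(angles), max(angles)
    return min_angle, max_angle, max_angle - min_angle

def check_rep(angles, target_min_angle, target_span):
    """Compare a repetition against hypothetical therapist-set targets.

    target_min_angle: the joint should bend to at least this angle (degrees).
    target_span: total range of motion the rep should cover (degrees).
    """
    min_angle, _max_angle, span = range_of_motion(angles)
    feedback = []
    if min_angle > target_min_angle:
        feedback.append(f"Bend further: reached {min_angle:.0f} deg, target {target_min_angle} deg")
    if span < target_span:
        feedback.append(f"Range too small: {span:.0f} deg of {target_span} deg")
    return feedback or ["Good repetition"]

# Knee angles (degrees) over one rep of a hypothetical mini-squat exercise
rep = [175, 160, 140, 120, 105, 118, 140, 165, 176]
print(check_rep(rep, target_min_angle=90, target_span=80))
```

Here the patient reaches 105 degrees and covers 71 degrees of motion, so both checks produce feedback.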

Fitness apps and AI-driven training are among the most obvious use cases for body posture estimation. A model running in a phone app can use the phone’s camera as a sensor to record a person’s exercise and analyze it.

Human motion tracking allows movement to be broken down into eccentric and concentric phases so that different flexion angles and overall posture can be analyzed. This is done by tracking key points and presenting the analysis through prompts or graphs. Processing can happen in real time or after a short delay, giving the user valuable insight into movement patterns and body mechanics.
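One simple way to split a movement into eccentric and concentric phases is to look at how a joint angle changes from frame to frame. For a squat, the knee angle decreases while the body lowers (eccentric) and increases while it rises (concentric).

The sketch below labels phases from the per-frame change. It counts repetitions with two thresholds (hysteresis) so that jitter around a single threshold does not double-count. The thresholds and the input series are assumptions chosen for illustration.

```python
def label_phases(angles, min_change=2.0):
    """Label each frame-to-frame transition as eccentric, concentric, or hold.

    Assumes a squat-like movement where the knee angle shrinks on the way down.
    """
    phases = []
    for prev, curr in zip(angles, angles[1:]):
        delta = curr - prev
        if delta <= -min_change:
            phases.append("eccentric")   # angle closing: lowering
        elif delta >= min_change:
            phases.append("concentric")  # angle opening: rising
        else:
            phases.append("hold")        # change too small to call
    return phases

def count_reps(angles, down_threshold=100.0, up_threshold=160.0):
    """Count reps: dropping below down_threshold, then rising above up_threshold."""
    reps, is_down = 0, False
    for angle in angles:
        if not is_down and angle < down_threshold:
            is_down = True
        elif is_down and angle > up_threshold:
            is_down = False
            reps += 1
    return reps

knee = [170, 150, 120, 95, 94, 110, 140, 165, 168, 130, 98, 125, 162]
print(label_phases(knee))
print(count_reps(knee))  # 2
```

The gap between the two thresholds means a rep only counts once the person has clearly gone down and clearly come back up.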

Human pose estimation is a very active area of research. There are many other approaches, and more are likely to emerge in the coming years. This field aims to create systems capable of detecting human poses from images or videos with high accuracy and minimal training data.

The health and safety impact of HPE’s advances is already evident, with many companies offering AI-based personal trainers, teletherapy, digital musculoskeletal (MSK) care, and virtual rehabilitation support. Beyond healthcare, modern computing has also made it easier and more convenient to create animated characters and superheroes using HPE.

Answer: Human pose estimation (HPE) is a method for detecting and classifying the joints of the human body. In essence, it captures a set of coordinates, called key points, for each joint or body part (arm, head, torso, etc.) that together describe a person’s posture. A connection between two of these points is called a pair.

Answer: Pose estimation is a computer vision technique that predicts and tracks the location of a person or object. This is done by looking at the combination of pose and orientation of a given person/object.

Answer: To test the model, take an image, run the BlazePose model (the same one used to create the dataset) to extract the key points of the person in the image, and then run the trained model on those key points. The model should return the correct result with a high confidence score.
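That test pipeline can be sketched as follows. The sketch assumes the trained model is a k-nearest-neighbors classifier over normalized key points. This is a common choice for small pose-classification datasets, but it may not be the model the answer refers to.

Key points are centered on the hip midpoint and scaled by torso length, so the person’s position and distance from the camera matter less. The joint list, labels, and synthetic poses are hypothetical. In practice, the `pose` dictionaries would come from BlazePose output.

```python
import math
from collections import Counter

JOINTS = ["left_shoulder", "right_shoulder", "left_hip", "right_hip",
          "left_knee", "right_knee"]

def normalize_pose(pose):
    """Flatten a {joint: (x, y)} pose, centered on the hips, scaled by torso size."""
    hip = [(a + b) / 2 for a, b in zip(pose["left_hip"], pose["right_hip"])]
    shoulder = [(a + b) / 2 for a, b in zip(pose["left_shoulder"], pose["right_shoulder"])]
    torso = math.dist(hip, shoulder) or 1.0  # avoid division by zero
    vec = []
    for name in JOINTS:
        x, y = pose[name]
        vec += [(x - hip[0]) / torso, (y - hip[1]) / torso]
    return vec

def classify(pose, training_set, k=3):
    """k-NN vote; returns (label, confidence), confidence = share of votes."""
    query = normalize_pose(pose)
    neighbors = sorted(training_set, key=lambda item: math.dist(query, item[0]))[:k]
    label, votes = Counter(lbl for _, lbl in neighbors).most_common(1)[0]
    return label, votes / k

def make_pose(squat, dx=0.0, dy=0.0, s=1.0):
    """Hypothetical synthetic pose, shifted by (dx, dy) and scaled by s."""
    base = {
        "left_shoulder": (-10, -100), "right_shoulder": (10, -100),
        "left_hip": (-8, 0), "right_hip": (8, 0),
        "left_knee": (-30, -10) if squat else (-8, 100),
        "right_knee": (30, -10) if squat else (8, 100),
    }
    return {n: (dx + s * x, dy + s * y) for n, (x, y) in base.items()}

# Tiny made-up "dataset": each pose at three positions and scales
training_set = [
    (normalize_pose(make_pose(sq, dx, dy, s)), "squat" if sq else "standing")
    for sq in (True, False)
    for dx, dy, s in [(0, 0, 1), (50, 20, 1.5), (-30, 10, 0.8)]
]
print(classify(make_pose(True, 200, 300, 2.0), training_set))  # ('squat', 1.0)
```

The test pose is shifted and scaled differently from every training example, yet the normalization maps it onto the squat examples.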

Answer: The input of a pose estimation model is usually a processed camera image, and the output is information about key points. Each detected key point is indexed by a part ID and comes with a confidence score between 0.0 and 1.0.
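A model’s output can be represented as a list of key points, each with a part ID, coordinates, and a confidence score. The sketch below uses a small dataclass and drops low-confidence detections before further processing. The field names, the part IDs, and the 0.3 threshold are assumptions, since each library defines its own output format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Keypoint:
    part_id: int   # index into the model's list of body parts
    x: float
    y: float
    score: float   # confidence in [0.0, 1.0]

def confident_keypoints(keypoints, threshold=0.3):
    """Keep key points with confidence >= threshold, keyed by part ID."""
    for kp in keypoints:
        if not 0.0 <= kp.score <= 1.0:
            raise ValueError(f"score out of range for part {kp.part_id}: {kp.score}")
    return {kp.part_id: kp for kp in keypoints if kp.score >= threshold}

detections = [
    Keypoint(part_id=0, x=120.0, y=40.0, score=0.92),
    Keypoint(part_id=5, x=100.0, y=90.0, score=0.81),
    Keypoint(part_id=9, x=80.0, y=160.0, score=0.12),  # likely occluded
]
print(sorted(confident_keypoints(detections)))  # [0, 5]
```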

Answer: 6D pose estimation determines an object’s six-degree-of-freedom pose: its 3D location (translation) and its 3D orientation (rotation). This is an important task in robotics, where a robotic arm needs to know an object’s position and orientation to grasp and move nearby objects.
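A 6D pose combines a 3D rotation R and a 3D translation t, so a point p on the object maps to camera (or robot) coordinates as p' = R p + t. For simplicity, the sketch below builds R from a single rotation about the z axis. A real estimator would return an arbitrary rotation, often as a quaternion or rotation matrix. The object points and pose values are made up.

```python
import numpy as np

def rotation_z(angle_deg):
    """Rotation matrix for a rotation of angle_deg degrees about the z axis."""
    a = np.radians(angle_deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])

def apply_pose(points, rotation, translation):
    """Map (N, 3) object-frame points into the camera frame: p' = R p + t."""
    return points @ rotation.T + translation

def pose_matrix(rotation, translation):
    """Pack R and t into a single 4 x 4 homogeneous transform."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

# Corners of a hypothetical 10 cm part, in the object's own frame (meters)
corners = np.array([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.0, 0.1, 0.0]])
R = rotation_z(90.0)           # rotational degrees of freedom (here only yaw)
t = np.array([0.5, 0.2, 0.3])  # 3 translational degrees of freedom
print(np.round(apply_pose(corners, R, t), 3))
```

The 4 x 4 form from `pose_matrix` is convenient when chaining transforms, for example from object to camera to robot base, because the chain becomes a single matrix product.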

Human pose estimation projects can be tricky and require expertise in several areas.

Are you planning human pose estimation projects or have questions about enabling posture detection in your fitness, physical therapy, augmented reality (AR), or other applications? Think smart; ThinkPalm has got you covered!

Connect with our experts today, and we can help you tackle your challenges with state-of-the-art AI services.

Also, check out our case study on how we helped our client digitally transform their rehabilitation program using Artificial Intelligence (AI).