Recent innovations combine state-of-the-art technology with knowledge of how the brain produces speech to tackle an important challenge faced by many patients. Researchers have combined several advances in machine learning to interpret patterns of brain activity and work out what someone wants to say, even if they are physically unable to speak.

Let us take Stephen Hawking as an example.

Hawking had a form of motor neuron disease that paralyzed him and took away his ability to speak, but he continued to communicate using a speech synthesizer. He initially operated it with a handheld switch, and eventually with a single cheek muscle. This was possible because he could still select words manually and trigger the synthesizer to speak them.

This is not possible for many other people with ALS, locked-in syndrome, or stroke, who may not have the ability to control a computer at all. Researchers are now closer to the goal of giving those patients ‘a voice for the voiceless’.

But decoding a person’s thoughts directly into speech raises privacy issues. A less intrusive starting point is to use the brain patterns recorded while a patient is listening to speech, and success there would be a significant step.

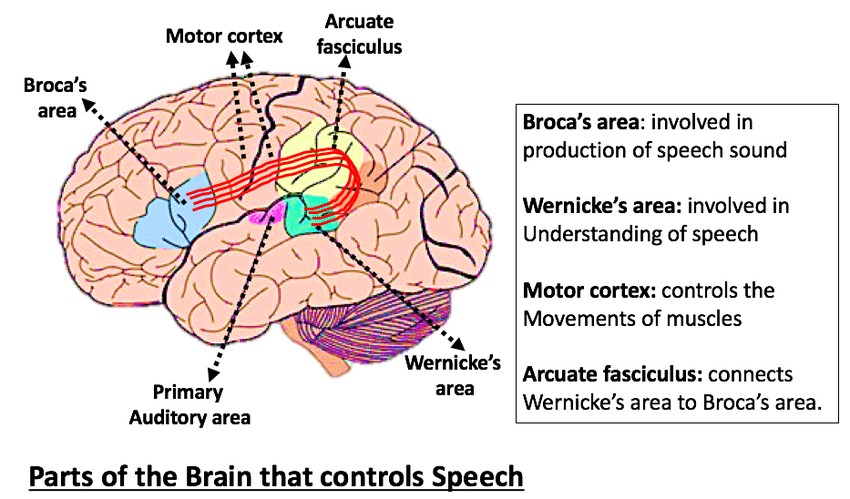

Brain-computer interfaces record brain patterns and translate them into machine commands. The auditory cortex is the area of the brain that is activated when we speak and listen, and also when we think. Speech can be reconstructed from activity in the human auditory cortex, which opens up the possibility of a speech neuroprosthetic that communicates directly with the brain. In the studies, patients’ auditory cortex activity is tracked as they listen to sentences read aloud by a variety of people.

A vocoder is a computer algorithm that synthesizes speech. The AI-enabled vocoder decodes brain patterns from the auditory cortex to reconstruct speech, and the voice it produces is reliable enough to be understood.
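To make the decoding step more concrete, here is a minimal sketch in Python with NumPy. It is not the researchers' model, which this article does not describe in detail. It swaps in a simple ridge regression that maps recorded neural features to vocoder parameters, and it runs on synthetic data. The function names, array shapes, and the `alpha` value are all assumptions chosen for illustration.

```python
import numpy as np


def make_synthetic_data(n_frames=500, n_electrodes=32, n_params=8, noise=0.1, seed=0):
    """Create made-up data: neural features and the vocoder parameters they map to.

    Nothing here comes from a real recording; a random linear map plus noise
    stands in for the relationship between brain activity and speech.
    """
    rng = np.random.default_rng(seed)
    true_map = rng.normal(size=(n_electrodes, n_params))
    neural = rng.normal(size=(n_frames, n_electrodes))
    params = neural @ true_map + noise * rng.normal(size=(n_frames, n_params))
    return neural, params


def fit_ridge_decoder(neural, vocoder_params, alpha=1.0):
    """Solve (X^T X + alpha * I) W = X^T Y for the decoder weights W."""
    n_features = neural.shape[1]
    gram = neural.T @ neural + alpha * np.eye(n_features)
    return np.linalg.solve(gram, neural.T @ vocoder_params)


def decode(neural, weights):
    """Predict one frame of vocoder parameters per frame of neural features."""
    return neural @ weights


neural, params = make_synthetic_data()

# Train on the first 400 frames and hold out the last 100 for checking.
train_x, test_x = neural[:400], neural[400:]
train_y, test_y = params[:400], params[400:]

weights = fit_ridge_decoder(train_x, train_y)
predicted = decode(test_x, weights)

# Correlation between predicted and actual parameters on held-out frames.
correlation = np.corrcoef(predicted.ravel(), test_y.ravel())[0, 1]
print(f"held-out correlation: {correlation:.3f}")
```

In a real system the predicted parameters would be passed to a vocoder to synthesize audio, and the decoder would likely be a more expressive model than a linear one. The sketch only shows the shape of the problem: brain activity in, speech parameters out.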

Listeners could understand and repeat what this robotic voice said about 75% of the time. In the studies, patients listened to readers reciting the digits zero to nine. Their brain patterns were fed into the AI-enabled vocoder, which produced speech in a robotic voice. Listeners could correctly identify the spoken digits, and could even tell whether the reader was male or female.
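A figure such as "75% of the time" can be read as a simple intelligibility score: the share of trials in which the listener reported the digit that was actually spoken. The sketch below shows that arithmetic in Python. The trial data is invented for illustration and is not taken from the studies, and the function names are hypothetical.

```python
from collections import defaultdict


def intelligibility(trials):
    """Return the fraction of trials where the reported digit matches the spoken one."""
    correct = sum(1 for spoken, reported in trials if spoken == reported)
    return correct / len(trials)


def per_digit_accuracy(trials):
    """Return a dict mapping each spoken digit to the fraction identified correctly."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for spoken, reported in trials:
        totals[spoken] += 1
        if spoken == reported:
            hits[spoken] += 1
    return {digit: hits[digit] / totals[digit] for digit in totals}


# Invented example: (digit spoken by the robotic voice, digit the listener reported).
trials = [
    (0, 0), (1, 1), (2, 2), (3, 8),
    (4, 4), (5, 9), (6, 6), (7, 7),
]

score = intelligibility(trials)
by_digit = per_digit_accuracy(trials)
print(f"overall intelligibility: {score:.0%}")
```

With six of the eight invented trials matching, the overall score is 0.75. The per-digit breakdown shows which digits listeners confused, which is the kind of detail a single percentage hides.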

There are limitations, however. The brain areas for hearing and speaking overlap only in part, so the signals produced when listening to speech may differ from those produced when trying to speak. The system is also patient-specific, because each individual produces different brain signals while listening to speech. Research is ongoing to overcome these limitations and to find a decoder that works for all individuals. In the meantime, we can be hopeful about an AI-enabled vocoder that provides a voice for the voiceless.
