Docker is a software containerization platform that lets you build an application, package it along with its dependencies into a container, and ship that container to run on other machines. Containers rely on containerization, which can be considered an evolved form of virtualization. The same task can also be achieved using virtual machines, but far less efficiently.

A common question at this point is: what is the difference between Virtualization and Containerization?

Virtualization is the technique of running a guest operating system on top of a host operating system. This technique was a revelation at the beginning because it allowed developers to run multiple operating systems in different virtual machines, all on the same host. This reduced the need for extra hardware resources.

The advantages of Virtual Machines or Virtualization are:

• Multiple operating systems can run on the same machine

• Maintenance and recovery are easier in case of failure

• The total cost of ownership is lower due to the reduced need for infrastructure

Virtualization also has some drawbacks. Running multiple virtual machines on the same host operating system degrades performance, because each guest OS runs on top of the host OS with its own kernel and its own set of libraries and dependencies. This takes up a large chunk of system resources, i.e. hard disk, processor and especially RAM.

Another problem with Virtual Machines is that they can take almost a minute to boot up. This delay is a serious concern for real-time applications.

• Running multiple Virtual Machines leads to unstable performance

• Hypervisors are not as efficient as the host operating system

• The boot-up process is slow

These drawbacks led to the emergence of a new technique called Containerization.

Containerization is the technique of bringing virtualization to the operating system level. While virtualization brings abstraction to the hardware, containerization brings abstraction to the operating system. Do note that Containerization is also a type of virtualization.

Containerization is, however, more efficient because there is no guest OS: containers use the host’s operating system and share relevant libraries & resources as and when needed, unlike virtual machines. The application-specific binaries and libraries of each container run on the host kernel, which makes processing and execution very fast. Even booting up a container takes only a fraction of a second, because all the containers share the host operating system and hold only the application-related binaries & libraries. They are lighter and faster than Virtual Machines.

• Containers on the same OS kernel are lighter and smaller

• Better resource utilization compared to VMs

• The boot-up process is short, taking a few seconds at most

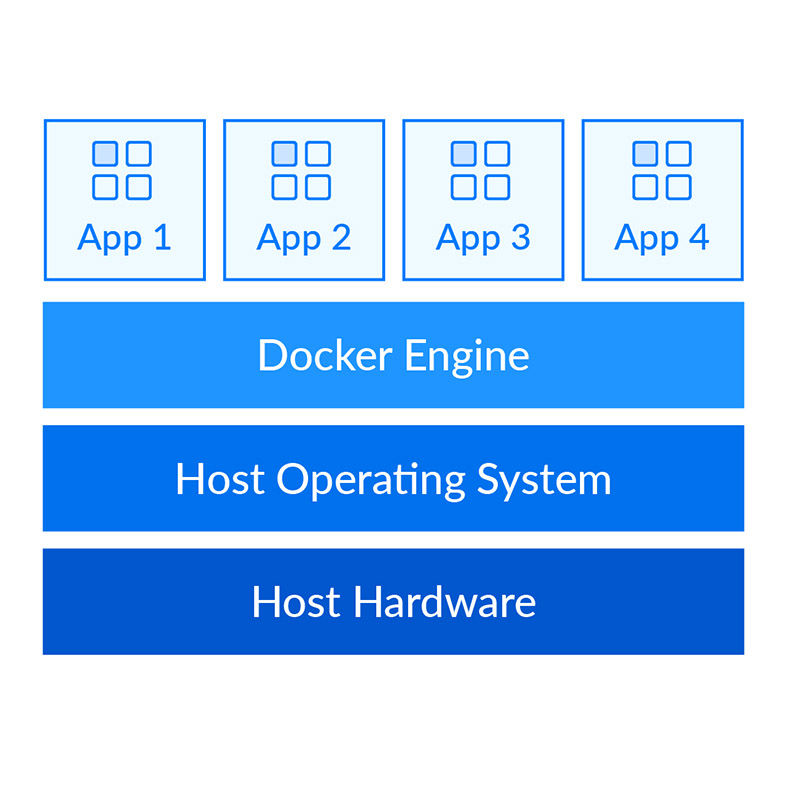

There is a host operating system which is shared by all the containers. Each container holds only its own application-specific libraries, which makes containers faster and far less wasteful of resources than virtual machines.

All these containers are handled by the containerization layer, which is not native to the host operating system. Hence, software is needed that enables you to create & run containers on your host operating system.

Docker is a containerization platform that packages your application and all its dependencies together in the form of Containers to ensure that your application works seamlessly in any environment.

Each application will run in a separate container and will have its own set of libraries and dependencies. This also ensures that there is process-level isolation, i.e., each application is independent of other applications, giving developers assurance that they can build applications that will not interfere with one another.

A developer can build a container that has different applications installed on it and give it to the QA team who will only need to run the container to replicate the developer environment.

Now, the QA team need not install all the dependent software and applications to test the code, which saves them a lot of time and energy. This also ensures that the working environment is consistent across all the individuals involved in the process, from development to deployment. The number of systems can be scaled up easily and the code can be deployed on them with little effort.

Virtualization and Containerization both let you run multiple isolated environments inside a host machine.

Virtualization deals with creating many operating systems in a single host machine. Containerization, on the other hand, creates a container for each application as required.

The major difference is that Virtualization runs multiple Guest Operating Systems, which are absent in Containerization. The best part of Containerization is that it is very lightweight compared to the much heavier Virtualization.

Docker is designed to benefit both Developers and System Administrators, making it a part of many DevOps toolchains. Developers can write their code without worrying about the testing or the production environment. System administrators need not worry about infrastructure, as Docker can easily scale the number of systems used for deployment up and down.

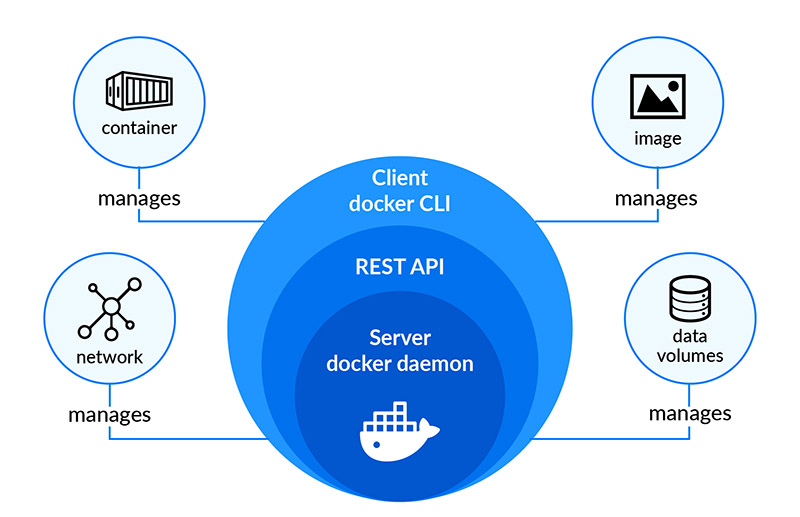

Docker Engine (the heart of the Docker system) is simply the Docker application that is installed on your host machine. It works as a client-server application made up of:

• A server which is a type of long-running program called a daemon process

• A REST API that specifies interfaces that programs can use to talk to the daemon and instruct it what to do.

• A command-line interface (CLI) client (the docker command).
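The three parts listed above can be sketched in a few lines of Python. This is only an illustration of the client-server idea, not how the docker command itself is implemented. It assumes a Linux host where the daemon listens on the default Unix socket /var/run/docker.sock, and the names `UnixHTTPConnection` and `daemon_get` are hypothetical helpers invented for this example.

```python
import http.client
import json
import socket

# Assumption: default location of the daemon's Unix socket on Linux.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that travels over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path):
        # The host name is only used for the Host header; no TCP lookup happens.
        super().__init__("localhost")
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_get(path, socket_path=DOCKER_SOCKET):
    """Send GET <path> to the daemon's REST API and return the decoded JSON."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()
```

On a machine with a running daemon, and with permission to read the socket, `daemon_get("/version")` should return a dictionary describing the engine. The docker CLI client sends requests of the same kind on your behalf.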

A Docker Image can be compared to a template that is used to create Docker Containers. Images are the building blocks of a Docker Container. They are created using the build command, and these read-only templates are then used to create containers with the run command.

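Docker lets people create and share software through Docker images. Also, you don’t have to worry about whether your computer has the right libraries to run the software in a Docker image: as long as Docker is installed and the image was built for your machine’s architecture, a Docker container can run it.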

Containers are the ready applications created from Docker Images. In other words, a Docker Container is a running instance of a Docker Image, and it holds the entire package needed to run the application. This is the ultimate utility of Docker.
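The template-versus-instance relationship can be pictured with a small Python model. This is a conceptual sketch only and does not use any Docker API. The class names, the `layers` field and the `run` function are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from itertools import count

_next_id = count(1)  # simple source of unique container ids for this sketch


@dataclass(frozen=True)
class Image:
    """Read-only template: frozen, so it cannot be changed once created."""
    name: str
    layers: tuple  # illustrative stand-in for the packaged binaries and libraries


@dataclass
class Container:
    """One running instance of an image, with its own private writable state."""
    image: Image
    container_id: int = field(default_factory=lambda: next(_next_id))
    writable_files: dict = field(default_factory=dict)


def run(image):
    """Create a new container from the image; the image itself is untouched."""
    return Container(image=image)


image = Image("demo-app", ("base-libraries", "application-binaries"))
first = run(image)
second = run(image)
first.writable_files["/tmp/log"] = "written by the first container"
```

Both containers point at the same unmodified image, but a file written in the first container does not appear in the second. That mirrors how many containers can be started from one image.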

Docker Registry is where Docker Images are stored. The Registry can be either a user’s local repository or a public repository like Docker Hub, which allows multiple users to collaborate in building an application. Even multiple teams within the same organization can exchange or share images by uploading them to Docker Hub. Docker Hub is Docker’s very own cloud repository, similar to GitHub.

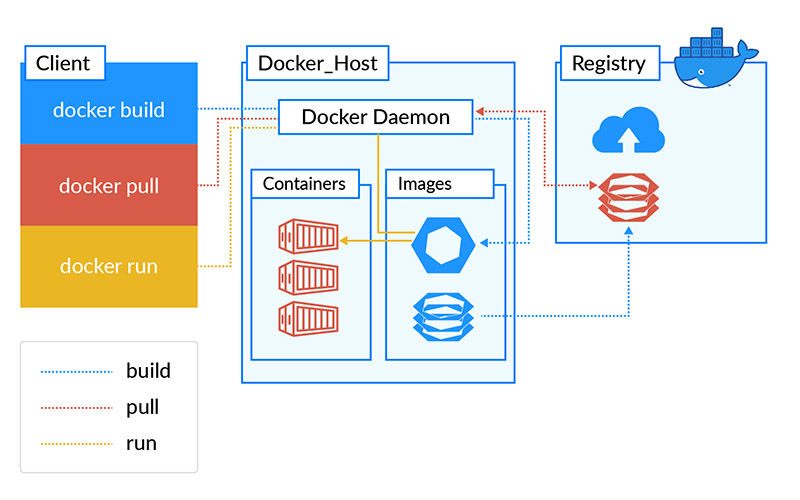

Docker Architecture includes a Docker client – used to trigger Docker commands, a Docker Host – running the Docker Daemon and a Docker Registry – storing Docker Images. The Docker Daemon running within Docker Host is responsible for the images and containers.

• To build a Docker Image, we can use the CLI (client) to issue a build command to the Docker Daemon (running on the Docker Host). The Docker Daemon will then build an image based on our inputs and store it on the Docker Host. From there, the image can be pushed to a Registry, which can be either Docker Hub or a local repository

• If we do not want to create an image, then we can just pull an image from Docker Hub, which would have been built by a different user

• Finally, if we have to create a running instance of our Docker image, we can issue a run command from the CLI, which will create a Docker Container.
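The three steps above map onto the docker CLI roughly as follows. The Python sketch below only assembles the command lines, so it can be read and tried without Docker installed. The helper names and the example image tags are hypothetical, and `execute` will only work on a machine where the docker command is available.

```python
import subprocess


def build_cmd(tag, context="."):
    """docker build: ask the daemon to build an image from the given directory."""
    return ["docker", "build", "-t", tag, context]


def pull_cmd(image):
    """docker pull: download an existing image from a registry."""
    return ["docker", "pull", image]


def run_cmd(image, name=None, detach=True):
    """docker run: create and start a container from an image."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")  # run the container in the background
    if name:
        cmd += ["--name", name]
    cmd.append(image)
    return cmd


def execute(cmd):
    """Hand a command to the docker CLI; raises if the command fails."""
    return subprocess.run(cmd, check=True)
```

For example, `execute(build_cmd("myapp:1.0"))` followed by `execute(run_cmd("myapp:1.0", name="myapp"))` would build an image from the current directory and start a container from it, assuming a Dockerfile is present there.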

Docker Compose is used to run multiple Docker Containers as a single service. Let me give you an example:

Suppose I have an application that requires WordPress, MariaDB and phpMyAdmin. I can create one file that starts all three containers as a service, without the need to start each one separately. It is especially useful if you have a microservice architecture.
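To give a feel for what that one file contains, here is a minimal Python sketch that builds the structure of such a Compose project and prints it as JSON. Compose files are normally written in YAML, and JSON is used here only to keep the example in one language. The service names, host ports and passwords are placeholders chosen for illustration, and the environment variable names are the ones I understand the official wordpress, mariadb and phpmyadmin images to read, so check the image documentation before relying on them.

```python
import json


def compose_project():
    """Describe three cooperating services in the shape Compose expects."""
    return {
        "services": {
            "db": {
                "image": "mariadb",
                "environment": {
                    "MARIADB_ROOT_PASSWORD": "change-me-root",  # placeholder
                    "MARIADB_DATABASE": "wordpress",
                    "MARIADB_USER": "wordpress",
                    "MARIADB_PASSWORD": "change-me",  # placeholder
                },
            },
            "wordpress": {
                "image": "wordpress",
                "depends_on": ["db"],  # start the database first
                "ports": ["8080:80"],  # host port 8080 is an arbitrary choice
                "environment": {
                    "WORDPRESS_DB_HOST": "db",
                    "WORDPRESS_DB_NAME": "wordpress",
                    "WORDPRESS_DB_USER": "wordpress",
                    "WORDPRESS_DB_PASSWORD": "change-me",
                },
            },
            "phpmyadmin": {
                "image": "phpmyadmin",
                "depends_on": ["db"],
                "ports": ["8081:80"],  # host port 8081 is an arbitrary choice
                "environment": {"PMA_HOST": "db"},
            },
        }
    }


compose_json = json.dumps(compose_project(), indent=2)
print(compose_json)
```

Since JSON is a subset of YAML, the printed output could be saved to a file and should be usable with `docker compose -f <file> up -d`, which starts all three containers with one command.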

Docker Swarm provides native clustering functionality for Docker containers, turning a group of Docker Engines into a single virtual Docker Engine. In Docker 1.12 and higher, Swarm mode is integrated with Docker Engine. The standalone swarm CLI utility that preceded it allows users to run Swarm containers, create discovery tokens, list nodes in the cluster, and more.

In simple words, Docker can be used for fast, consistent delivery of your applications!

Docker streamlines the development lifecycle by allowing developers to work in standardized environments using local containers that provide your applications and services. Containers are great for continuous integration and continuous delivery (CI/CD) workflows.

Have a look at the following scenario:

• Your developers write code locally and share their work with their colleagues using Docker containers.

• They use Docker to push their applications into a test environment and execute automated and manual tests.

• When developers find bugs, they can fix them in the development environment and redeploy the fix to the test environment for testing and validation.

• When testing is complete, getting the fix to the customer is as simple as pushing the updated image to the production environment.
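The scenario above can be sketched as a small pipeline in Python. This is one possible way to arrange the steps under stated assumptions, not a prescribed CI/CD setup. The image tag and the test command are hypothetical, and the `runner` argument is any function that takes a command line and returns its exit code, so the sketch can be tried with a fake runner.

```python
def delivery_steps(image, test_command):
    """Return the ordered docker CLI commands for one build-test-release cycle."""
    return [
        ("build", ["docker", "build", "-t", image, "."]),
        # --rm removes the test container once the tests have finished
        ("test", ["docker", "run", "--rm", image, *test_command]),
        ("release", ["docker", "push", image]),
    ]


def run_pipeline(steps, runner):
    """Run the steps in order and stop at the first one that fails.

    Returns (completed_stage_names, failed_stage_name_or_None).
    """
    completed = []
    for stage, cmd in steps:
        if runner(cmd) != 0:
            return completed, stage
        completed.append(stage)
    return completed, None


steps = delivery_steps("myapp:1.0", ["pytest"])

# A fake runner that pretends the tests fail, so nothing is pushed.
result = run_pipeline(steps, lambda cmd: 1 if cmd[1] == "run" else 0)
```

With a real runner such as `lambda cmd: subprocess.run(cmd).returncode`, the same function would push the image only after the tests pass, which is the behaviour the last bullet describes.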

Containers and Docker have the potential to open up innovative possibilities for many industries and enterprises. Contact us today if you would like to learn more about how Docker can help your business!