Virtual Machines (VMs) and containers differ along a few key dimensions. VMs provide a virtualized environment for running multiple OS instances (see figure a). Containers isolate applications and act as a virtual platform for running more applications (see figure b). Containers virtualize the OS, which lets multiple workloads run on a single OS instance, whereas VMs virtualize the hardware to run multiple OS instances. Containers’ agility, portability and speed make them yet another tool for streamlining software development.

In a container system, an underlying OS provides basic services to all the containerized applications, and virtual-memory support is used to isolate them. A hypervisor, by contrast, runs VMs that each have their own operating system, using hardware VM support.

A hardware VM system routes certain communication with a VM through the hardware. By mapping the real hardware into a virtual machine’s environment, it lets the VM’s device driver handle the hardware directly. For instance, through hardware I/O virtualization, a single Ethernet adapter can present multiple virtual instances of itself. Each virtual machine can then manage its own instance directly.

VMs are managed by a hypervisor and use VM hardware support (a). Container systems provide operating system services from the underlying host and isolate applications using virtual-memory hardware (b).

A VM is an abstract machine that uses device drivers targeting that machine, while a container provides an abstract operating system. A para-virtualized VM environment provides a hardware abstraction layer. Applications running in a container environment share an underlying operating system, whereas VM systems can run different operating systems. Typically, a VM hosts multiple applications that might change over time, while a container normally holds a single application. However, a single container can also hold a fixed set of applications.

Docker is an open platform for developing, shipping, and running applications. It lets you separate your applications from your infrastructure so that you can deliver software quickly. With Docker, you can manage your infrastructure the same way you manage your applications. By using Docker’s methodologies for shipping, testing and deploying code quickly, you can significantly reduce the delay between writing code and running it in production.

Docker provides the ability to package and run an application in a loosely isolated environment called a container. The isolation and security allow you to run many containers simultaneously on a given host. Containers are lightweight because they do not need the extra load of a hypervisor; they run directly within the host machine’s kernel. This means you can run more containers on a given hardware combination than you could virtual machines. You can even run Docker containers within host machines that are actually virtual machines!

Docker also equips you with a platform and tools to manage the life cycle of your containers:

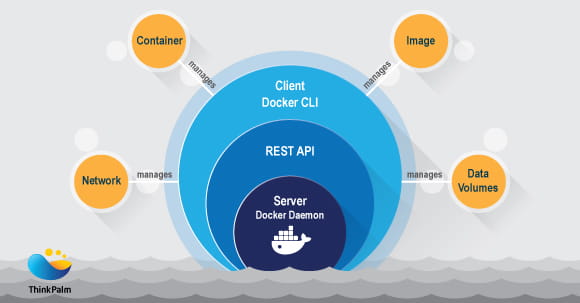

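Docker Engine is a client-server application with three major components: a server, which is a long-running daemon process (dockerd); a REST API, which specifies the interfaces that programs use to talk to the daemon and instruct it what to do; and a command-line interface (CLI) client (docker).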

The CLI uses the Docker REST API to control or interact with the Docker daemon, either through scripting or through direct CLI commands. Many other Docker applications also use the underlying API and CLI. The daemon creates and manages Docker objects such as images, containers, networks and volumes.

Note: Docker is licensed under the open source Apache 2.0 license.

Docker streamlines the software development life cycle by giving developers a standardized environment, using local containers that provide their applications and services. Containers are well suited to continuous integration and continuous delivery (CI/CD) workflows.

Docker’s container-based platform allows highly portable workloads. Docker containers can run in many environments, including local laptops, cloud providers, data centers and more. Docker’s portability and lightweight nature make it easy to manage workloads dynamically, scaling applications and services up or tearing them down as business needs dictate, in near real time.

Because Docker is lightweight and fast, it provides a viable, cost-effective alternative to hypervisor-based virtual machines, so you can put more of your compute capacity toward your business goals. Docker is well suited to high-density environments and to small and medium deployments where you need to do more with fewer resources.

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which builds, runs and distributes Docker containers. You can run the Docker client and daemon on the same system, or connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface.
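Because the client and daemon speak plain HTTP over a UNIX socket, any HTTP client that can connect to that socket can act as a minimal Docker client. The sketch below assumes a Linux host where the daemon listens on the common default socket `/var/run/docker.sock` (access usually requires root or membership in the `docker` group). The helper names `UnixHTTPConnection` and `docker_get` are hypothetical, chosen for illustration; only the `GET /version` endpoint (the request behind `docker version`) is part of the real Docker Engine API.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that connects to a UNIX socket instead of TCP (hypothetical helper)."""

    def __init__(self, socket_path, timeout=10):
        # The host name only fills the HTTP Host header; the daemon ignores it.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        # Replace the default TCP connect with a UNIX-domain socket connect.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path, socket_path="/var/run/docker.sock"):
    """Send a GET request to the daemon's REST API and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"{resp.status} {resp.reason}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On a host with a running daemon, `docker_get("/version")` should return a dictionary with keys such as `"Version"` and `"ApiVersion"`, which is the same information the `docker version` command prints for the server side.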

The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as containers, networks, images and volumes. A daemon can also communicate with other daemons to manage Docker services.

The Docker client (docker) is the primary way that many Docker users interact with Docker. When you use commands such as docker run, the client sends them to dockerd, which carries them out. The docker command uses the Docker API, and a single Docker client can communicate with more than one daemon.
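Which daemon the client talks to is just a matter of which endpoint it connects to. The Docker CLI reads the real `DOCKER_HOST` environment variable for this and falls back to a local socket when it is unset. The sketch below is a simplified, hypothetical resolver (`resolve_docker_host` is not a Docker API): it assumes `unix:///var/run/docker.sock` as the default and only understands `unix://` and `tcp://` URLs with an explicit port.

```python
import os
from urllib.parse import urlparse

# Assumed default: the usual local daemon socket on Linux.
DEFAULT_DOCKER_HOST = "unix:///var/run/docker.sock"


def resolve_docker_host(env=None):
    """Map a DOCKER_HOST-style URL to a connection target (simplified sketch)."""
    env = os.environ if env is None else env
    url = urlparse(env.get("DOCKER_HOST") or DEFAULT_DOCKER_HOST)
    if url.scheme == "unix":
        return ("unix", url.path)  # local daemon over a UNIX socket
    if url.scheme == "tcp":
        if url.port is None:
            raise ValueError("this sketch requires an explicit port for tcp:// hosts")
        return ("tcp", url.hostname, url.port)  # daemon reached over the network
    raise ValueError(f"unsupported DOCKER_HOST scheme: {url.scheme!r}")
```

Pointing one client at several daemons then amounts to resolving different values, for example `resolve_docker_host({"DOCKER_HOST": "tcp://10.0.0.5:2375"})` for a remote daemon and `resolve_docker_host({})` for the local one.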

A Docker registry stores Docker images. Docker Hub and Docker Cloud are public registries that anyone can use. By default, Docker looks for images on Docker Hub. You can also run your own private registry. Docker Datacenter (DDC) includes Docker Trusted Registry (DTR) as well.

When you use the docker pull or docker run commands, the required images are pulled from your configured registry. When you use the docker push command, your image is pushed to your configured registry.
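The "search Docker Hub by default" behavior shows up in how short image names are expanded before a pull or push: `ubuntu` is treated as `docker.io/library/ubuntu:latest`. The function below is a simplified, hypothetical sketch of that normalization (`normalize_image_ref` is not a Docker API). It assumes the first path component is a registry host only when it contains a `.` or `:` or is `localhost`, and it skips the validation and aliases the real reference parser handles.

```python
DEFAULT_REGISTRY = "docker.io"


def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference into registry/repository[:tag][@digest]."""
    name, _, digest = ref.partition("@")
    tag = None
    # A tag is a ':' that appears after the last '/', so the port in
    # "localhost:5000/app" is not mistaken for a tag.
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]
    first, _, rest = name.partition("/")
    # Treat the first component as a registry host only if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = DEFAULT_REGISTRY, name
    # Official images on Docker Hub live under the "library/" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in path:
        path = "library/" + path
    result = f"{registry}/{path}"
    if tag:
        result += ":" + tag
    elif not digest:
        result += ":latest"  # default tag when neither a tag nor a digest is given
    if digest:
        result += "@" + digest
    return result
```

With this sketch, `normalize_image_ref("nginx:1.25")` yields `docker.io/library/nginx:1.25`, while a private registry reference such as `localhost:5000/app:dev` is left pointing at that registry.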

Docker Store allows you to buy and sell Docker images or distribute them for free. For example, you can buy a Docker image that contains an application or service from a software vendor and use that image to deploy the application into your testing, staging or production environments. You can upgrade the application by pulling the latest version of the image and redeploying the containers.

When you use Docker, you create and use images, containers, networks, volumes, plugins and other objects. Here’s a brief overview of some of those objects:

An image is a read-only template with instructions for creating a Docker container. Often, an image is based on another image, with some additional customization. For example, you might build an image that is based on the ubuntu image but also installs the Apache web server and your application, along with the configuration details needed to make your application run.

You might create your own images, or you might use only those created by others and published in a registry. To build your own image, you create a Dockerfile with a simple syntax that defines the steps needed to create the image and run it. Instructions that modify the filesystem, such as RUN, COPY and ADD, each create a layer in the image. When you change the Dockerfile and rebuild the image, only the layer for the changed instruction and the layers after it are rebuilt; earlier layers are reused from the build cache. This is part of what makes Docker images lightweight, small and fast compared to other virtualization technologies.
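The caching rule above can be modeled by keying each layer on its parent layer plus its instruction: once one instruction changes, its layer gets a new ID, so every later step has a new parent and misses the cache too. This is a toy sketch with hypothetical `layer_id` and `build` helpers. It compares instruction text only (real Docker also checksums the files used by COPY and ADD) and, for simplicity, treats every instruction, including FROM, as producing a layer.

```python
import hashlib


def layer_id(parent_id: str, instruction: str) -> str:
    """Derive a deterministic ID from the parent layer and the instruction text."""
    return hashlib.sha256(f"{parent_id}\n{instruction}".encode()).hexdigest()[:12]


def build(instructions, cache):
    """Build a toy image; return (layer_ids, instructions_that_were_rebuilt).

    cache maps (parent_layer_id, instruction) -> layer_id and is updated in place.
    """
    parent = ""
    layers, rebuilt = [], []
    for instruction in instructions:
        key = (parent, instruction)
        if key not in cache:
            # Cache miss: this step runs again, and because its ID changes,
            # every later step sees a new parent and misses the cache as well.
            cache[key] = layer_id(parent, instruction)
            rebuilt.append(instruction)
        parent = cache[key]
        layers.append(parent)
    return layers, rebuilt
```

Building the same list twice with a shared `cache` rebuilds nothing the second time; editing only the third instruction rebuilds that step and the ones after it, while the first two layer IDs stay the same.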

A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can also connect a container to one or more networks, attach storage to it, or even create a new image based on its current state.
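The lifecycle operations above behave like a small state machine: some commands are only valid in certain states (for example, docker rm refuses to remove a running container unless forced). The sketch below is a simplified, hypothetical model (`ContainerLifecycle` and `TRANSITIONS` are illustrative names). Real Docker also has states such as restarting, removing and dead, which are omitted here, and this sketch only allows stop from the running state.

```python
# action -> (states the action is allowed from, resulting state)
TRANSITIONS = {
    "create":  ({None}, "created"),                  # docker create
    "start":   ({"created", "exited"}, "running"),   # docker start
    "pause":   ({"running"}, "paused"),              # docker pause
    "unpause": ({"paused"}, "running"),              # docker unpause
    "stop":    ({"running"}, "exited"),              # docker stop
    "rm":      ({"created", "exited"}, "removed"),   # docker rm (without --force)
}


class ContainerLifecycle:
    """Tracks one container's state and rejects invalid transitions."""

    def __init__(self):
        self.state = None  # not created yet
        self.history = []

    def apply(self, action):
        allowed, target = TRANSITIONS[action]
        if self.state not in allowed:
            raise ValueError(f"cannot {action!r} a container in state {self.state!r}")
        self.state = target
        self.history.append(action)
        return self.state
```

A typical run is create, start, stop, rm; calling `apply("rm")` while the container is still running raises an error, mirroring the CLI's refusal.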

By default, a container is relatively well isolated from other containers and from its host machine. You can control how isolated a container’s network, storage, or other underlying subsystems are from other containers or from the host machine.

A container is defined by its image as well as any configuration options you provide when you create or start it. When a container is removed, any changes to its state that are not stored in persistent storage disappear.
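The difference between a container's own changes and persistent storage can be pictured as three layers of lookup: mounted volumes first, then the container's private writable layer, then the read-only image. This is a toy model with a hypothetical `ToyContainer` class, not how Docker's storage drivers are implemented. Plain dictionaries stand in for the image and for a named volume (in real Docker, something you would mount with `docker run -v`).

```python
from types import MappingProxyType


class ToyContainer:
    """Toy model: read-only image files, a private writable layer, and shared volumes."""

    def __init__(self, image_files, mounts=None):
        self.image = MappingProxyType(dict(image_files))  # read-only view of the image
        self.writable = {}  # per-container changes; discarded with the container
        self.mounts = mounts or {}  # mount point -> volume dict shared across containers

    def _volume_for(self, path):
        for mount_point, volume in self.mounts.items():
            prefix = mount_point.rstrip("/") + "/"
            if path.startswith(prefix):
                return volume, path[len(prefix):]
        return None, path

    def write(self, path, data):
        volume, rel = self._volume_for(path)
        if volume is not None:
            volume[rel] = data  # persists independently of the container
        else:
            self.writable[path] = data  # the image itself is never modified

    def read(self, path):
        volume, rel = self._volume_for(path)
        if volume is not None:
            return volume.get(rel)
        if path in self.writable:
            return self.writable[path]
        return self.image.get(path)
```

If one container edits a file from the image and also writes into a mounted volume, a second container created from the same image and volume sees the original image file but the volume data, which is the behavior the paragraph describes.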

Services let you scale containers across multiple Docker daemons that work together as a swarm, with multiple managers and workers. Each member of a swarm is a Docker daemon, and the daemons communicate using the Docker API. A service lets you define the desired state, such as the number of replicas of the service that should be available at any given time. By default, the service is load-balanced across all worker nodes. To the consumer, the Docker service appears to be a single application. Swarm mode is available in Docker Engine 1.12 and later.
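The "desired state" idea means the swarm keeps comparing the replicas that are running against the replica count you asked for and starts or stops tasks to close the gap. The sketch below illustrates that reconciliation with hypothetical `reconcile` and `dispatch` helpers. It assumes a simple spread rule (place each new task on the least-loaded node) and models load balancing as plain round-robin across tasks; Docker's actual scheduler and routing mesh are more involved.

```python
import itertools
from collections import Counter


def reconcile(desired, tasks, nodes):
    """Return a task list with exactly `desired` replicas.

    tasks: list of (task_id, node) pairs currently running.
    nodes: list of available node names.
    """
    tasks = list(tasks)
    next_id = max((task_id for task_id, _ in tasks), default=0) + 1
    while len(tasks) < desired:
        load = Counter({node: 0 for node in nodes})
        load.update(node for _, node in tasks)
        # Spread: place the new task on the least-loaded node (first one wins ties).
        target = min(nodes, key=lambda node: load[node])
        tasks.append((next_id, target))
        next_id += 1
    # Scale down by dropping tasks beyond the desired count (the tail of the list).
    return tasks[:desired]


def dispatch(n_requests, tasks):
    """Round-robin incoming requests across task IDs, so callers see one service."""
    task_ids = itertools.cycle([task_id for task_id, _ in tasks])
    return [next(task_ids) for _ in range(n_requests)]
```

For example, asking for three replicas on three nodes places one task per node; if one node then fails and its task is dropped, calling `reconcile` again with the surviving nodes starts a replacement on a remaining node, restoring the desired count without any manual step.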