An Introduction to DevOps

DevOps is a collection of practices that automates the processes connecting software development and IT teams so that they can build, test, and release software faster and more reliably. DevOps is founded on building a culture of collaboration among teams that have traditionally worked in relative silos. Instead of working in separate silos, development and operations can be merged into a single team that works across the entire application lifecycle, from development and testing to deployment and operations.

In some DevOps models, quality assurance and security teams may also become more tightly integrated with development and operations throughout the application lifecycle.

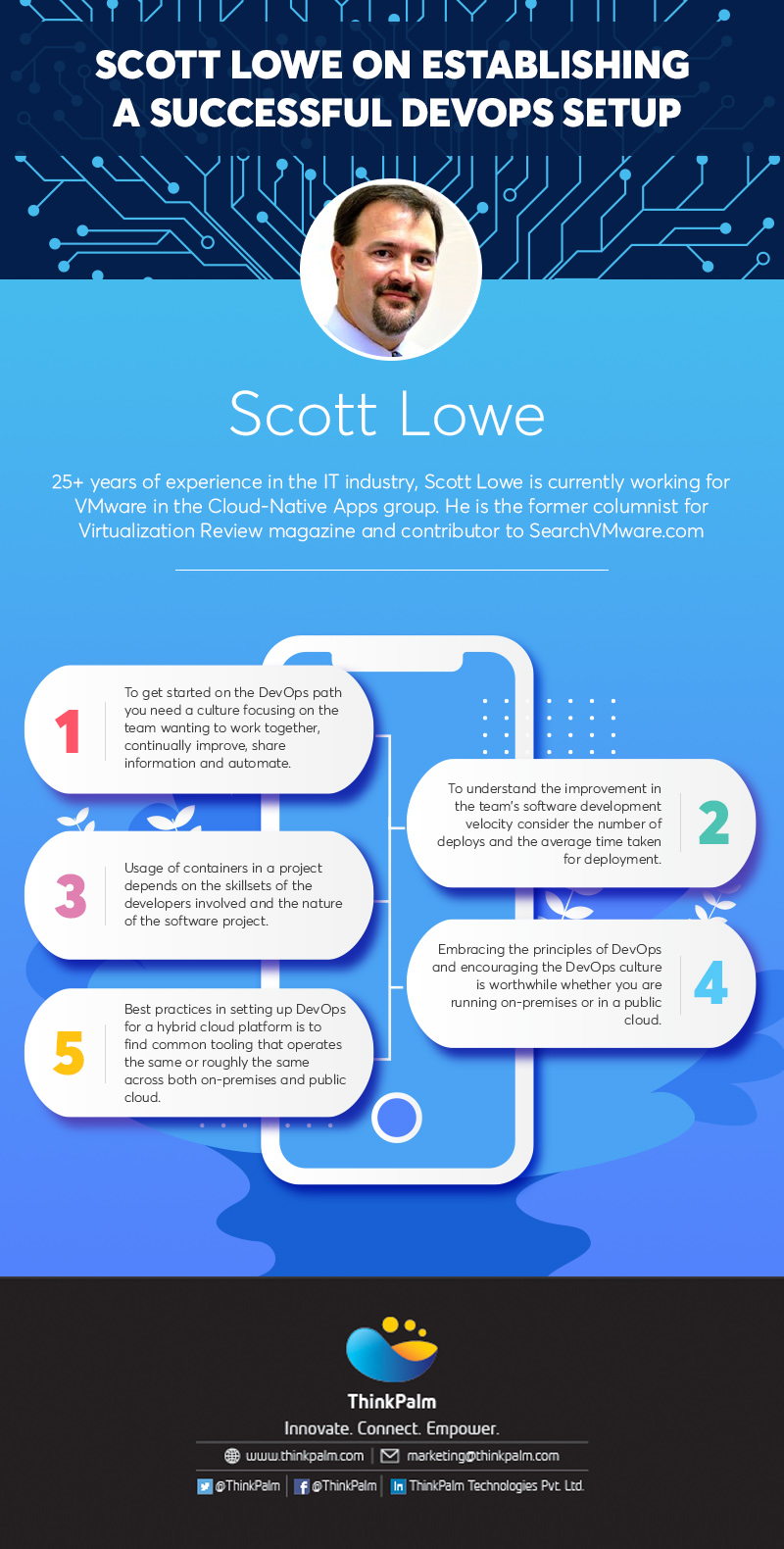

Let’s find out what Mr. Scott Lowe, a DevOps expert, has to say about establishing a successful DevOps setup.

1. What should the priorities and strategies be when transitioning a team to DevOps?

My advice to those who are looking to transition a team, or to get started on the DevOps path themselves, is to focus on culture (or mindset). You need a culture focused on working together, continually improving, sharing information, and automating as much as possible. There is no harm in adopting tools to increase automation or streamline processes, but if you don’t have a culture of using those tools to continuously streamline and improve your processes, I don’t think you will ultimately be as successful as you could be.

2. What are the KPIs that we need to evaluate to ensure a successful DevOps setup?

KPIs are meaningful only if they are organization-specific, but in general, you might want to look at the number of deployments (how often a team deploys) and/or the average time it takes to complete a deployment. Measuring how often a team deploys, or how much deployment time improves from before the transition to afterward, and continuously monitoring these numbers along the way, will give you a rough idea of how the team’s software development velocity is improving. To gain or maintain a competitive advantage, many organizations strive to improve these metrics, both for their internal software and for the software they deliver to customers. You could also look at defect counts and signals from unit tests or system tests in the CI/CD pipeline, but these are indicators of quality rather than of development velocity. Ultimately, you will have to determine which metrics matter most for your teams and your organization.
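To make the two velocity metrics concrete, here is a minimal Python sketch that computes deployment frequency (normalized to deployments per week) and mean deployment duration from a list of deployment records. The `Deployment` record, its fields, and the sample data are assumptions for illustration; in practice the timestamps would come from your CI/CD system's history, and which metrics you track remains an organization-specific choice.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass(frozen=True)
class Deployment:
    """One deployment record (hypothetical shape, not tied to any CI/CD tool)."""
    started: datetime
    finished: datetime


def deploys_per_week(deployments: list[Deployment], period_days: int) -> float:
    """Deployment frequency over an observation window, scaled to a 7-day week."""
    return len(deployments) * 7 / period_days


def mean_deploy_minutes(deployments: list[Deployment]) -> float:
    """Average wall-clock duration of a deployment, in minutes.

    Raises statistics.StatisticsError if the list is empty.
    """
    return mean((d.finished - d.started) / timedelta(minutes=1) for d in deployments)


# Hypothetical two-week window with two deployments (60 and 30 minutes long).
window = [
    Deployment(datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 10, 0)),
    Deployment(datetime(2024, 1, 8, 9, 0), datetime(2024, 1, 8, 9, 30)),
]
print(f"{deploys_per_week(window, 14):.1f} deploys/week, "
      f"{mean_deploy_minutes(window):.0f} min per deploy")
```

Computing the same two numbers for a window before the transition and a window after it gives the before-and-after comparison described in the answer.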

3. When should we use DevOps and containerization in a project? Does it depend on the size or cost of the project, how agile it is, or its architecture?

I would decouple DevOps and containerization because I don’t believe they are inherently connected. Containers are tools you can use in a software development process, but developers can use containers and still not embrace the principles and culture behind DevOps. So let’s look at them separately.

When you should use DevOps depends on your organization. If you are trying to build an organization that embraces DevOps principles, then the answer is all the time! You should build a culture of automating processes, being lean and efficient, using metrics to measure your performance, and sharing information freely and broadly. If you are taking a team down the DevOps path, you have to keep the team on that path all the time.

Whether to use containers in a project depends on the skill sets of the developers involved and the nature of the software project they are working on. In my opinion, adopting technology for technology’s sake makes no sense. If the application is a monolith, doesn’t scale well horizontally, or has tightly coupled components, containers may not be the best approach. The same might be true if an organization has significant experience and expertise with infrastructure as code and configuration management tools that help automate many aspects of its infrastructure and environment. In that case, forcing the organization to use containers might be a disservice, because it could no longer take advantage of its existing skill sets to deliver value to its customers. If the application is modular, has components that need to scale independently, and lends itself to being packaged as containers and deployed with a container orchestration system like Kubernetes, you should go ahead and use containers.

4. Do you find value in setting up on-premises DevOps? Is it a technology mainly focused on the cloud?

I would decouple the idea of DevOps from the underlying toolchains you use, because DevOps is first and foremost about culture (or mindset). There is certainly value in cultivating a DevOps culture when your software development toolchain is primarily on-premises, but it may come with its share of challenges. In many cases, on-premises environments don’t have the comprehensive APIs available in the public cloud, which makes it difficult to adopt some of the automation toolchains that normally follow once a DevOps culture is in place. But embracing the principles of DevOps and encouraging the culture it advocates is absolutely worthwhile whether you are running on-premises or in a public cloud.

When it comes to toolchains, you will generally find that tools work a little better in the public cloud, because they were built from the ground up on the assumption that there are APIs they can call for automation. Look at infrastructure-as-code tools or container orchestration platforms like Kubernetes, which make assumptions about the availability of certain features in the infrastructure they run on. In any case, only after you have cultivated the culture will you start looking at the toolchains and processes you are going to use: tools suited to on-premises, to the public cloud, or to both environments.

5. What are the best practices in setting up DevOps for a hybrid cloud platform?

There are a few best practices. One is to find common tooling that operates the same, or roughly the same, both on-premises and in the public cloud. You can also build that tooling into your processes: ask yourself whether there are ways to create processes using tools that behave the same in public cloud and on-premises environments. One reason people find Kubernetes attractive is that it is agnostic of the underlying infrastructure. If you deploy applications onto Kubernetes running on AWS, you are talking to the Kubernetes API. If you deploy applications onto Kubernetes running on-premises on a hypervisor like vSphere or KVM, you are still talking to the Kubernetes API. While there are minor differences in how Kubernetes interacts with the underlying infrastructure, by and large, application deployment and CI/CD talk only to Kubernetes and do not depend on the underlying infrastructure. This gives you the flexibility to build processes on Kubernetes that are relatively seamless and look the same whether you are deploying applications on-premises or in the public cloud. There are other tools you could look at besides Kubernetes, but many people prefer Kubernetes because it provides an abstraction layer that makes hybrid environments easier to support than other options do.
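To illustrate the point that deployment tooling talks only to the Kubernetes API, the sketch below builds a minimal `apps/v1` Deployment as a Python dictionary and prints it as JSON, a format `kubectl apply -f` accepts. The application name, image, and replica count are hypothetical. Nothing in the manifest refers to AWS, vSphere, or KVM, which is why the same file can be applied to a cluster in either environment.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment with no infrastructure-specific fields."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical app name and image; redirect stdout to a file such as web.json.
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3), indent=2))
```

The same `web.json` could then be applied with `kubectl --context <cloud-context> apply -f web.json` and `kubectl --context <on-prem-context> apply -f web.json`, where the context names are placeholders from your kubeconfig. Infrastructure differences such as storage or load balancer integration still exist, but they sit below this API.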

6. When it comes to scaling up or managing technology changes to an existing DevOps setup in enterprise projects, what are the aspects that we need to consider?

I don’t know that there is a generic answer, because it depends on the software, tools, and processes that you build or select while transitioning your team toward a DevOps culture (or mindset).

If you are using containers and a container orchestrator like Kubernetes, it would involve understanding Kubernetes’ scaling points: scaling the control plane, understanding the control plane’s failure modes, scaling the number of worker nodes and workloads, the actual containers being deployed on those nodes, and beyond. It may involve using specific features called taints and tolerations, which help optimize how workloads are scheduled so that you make the most effective use of the underlying hardware. Again, it depends entirely on the tools being used.
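Taints and tolerations can be hard to picture, so here is a simplified Python model of the matching rule Kubernetes documents: a toleration matches a taint when the effects match (an empty toleration effect matches any effect) and either the operator is `Exists` with the same key (an empty key tolerates everything) or the operator is `Equal` with the same key and value. This is a sketch, not the scheduler's code. The `gpu=true` taint in the example is hypothetical, and only the hard `NoSchedule` and `NoExecute` effects are treated as blocking placement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule", or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # empty key with operator "Exists" tolerates every taint
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # empty effect matches all effects


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Simplified version of the Kubernetes toleration-matching rules."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return tol.key == "" or tol.key == taint.key
    return tol.key == taint.key and tol.value == taint.value


def can_schedule(tolerations: list[Toleration], taints: list[Taint]) -> bool:
    """A pod fits a node only if every hard taint on the node is tolerated.

    PreferNoSchedule is a soft preference, so it never blocks placement here.
    """
    hard = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)


# Hypothetical node reserved for GPU workloads.
gpu_node_taints = [Taint("gpu", "true", "NoSchedule")]
gpu_pod = [Toleration(key="gpu", operator="Equal", value="true", effect="NoSchedule")]
print(can_schedule(gpu_pod, gpu_node_taints))  # True
print(can_schedule([], gpu_node_taints))       # False
```

Tolerating a taint only permits a pod to run on the tainted node; it does not attract the pod there. Keeping GPU workloads on those nodes would additionally need node affinity or a node selector.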

If your organization is working on an application that is not amenable to containerization and it makes more sense to run it on EC2 instances, it might involve looking at the tools used to build and optimize AMIs for AWS, the infrastructure-as-code components used to deploy the instances, or tools used to optimize the code so that it deploys more quickly, and so on. You should also consider the patterns you use with infrastructure as code, including how you patch existing instances: do you update them in place by running a configuration management tool against them, or do you deploy entirely new instances using an immutable infrastructure approach? Many factors determine how you optimize your tools, your processes, or the combination of the two.

7. What should we consider when managing multiple Kubernetes clusters, and how can we manage them effectively?

There are a couple of different aspects to consider. Generally speaking, there will probably be a cluster operator team that manages the clusters themselves, including capacity, performance, availability, and resiliency. This team needs to make sure that the control plane is working, the nodes are functioning, the integration with the underlying cloud platform works as expected, and the cluster components (logging, monitoring, ingress, load balancing, and more) are deployed correctly. This is essentially infrastructure management: ensuring that the cluster is available, up, running efficiently, and not experiencing errors.

The other aspect is deploying applications onto these clusters, and that gets complex in a different way than managing the clusters themselves. You have to analyze whether the application would benefit from horizontal scaling of its components, where to run the different tiers of the application (if it has multiple tiers), which components of the app get deployed to which cluster, how to use Kubernetes Service objects so that components spread across clusters can work together, how to configure Kubernetes Ingress objects so that traffic can flow into a cluster, which external load-balancing solutions to use to spread traffic across the clusters hosting app components, and more. I have seen some organizations build bespoke systems to help with these aspects, but I haven’t come across a generic system that attempts to address all of these use cases.

8. What security aspects should we consider to secure a Kubernetes cluster?

Security is a whole topic in itself, but one of the major areas to think about is cluster configuration. This includes aspects like Network Policies within the cluster, to ensure that pods communicate with each other only in the expected manner, or Pod Security Policies, to ensure that pods are not escalating their privileges or running as the root user. These configuration settings are applied on a per-cluster basis. You might have different settings for production and staging, and you might have multiple clusters in production. You have to ensure not only that the settings are correct in each cluster, but also that they are consistent across all of your production clusters.
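Checking that settings are consistent across production clusters is straightforward to automate once each cluster's security-relevant settings are collected into a common shape. The Python sketch below compares every cluster against a baseline and reports drift. The setting names, the cluster names, and how the values are collected (for example, by querying each cluster's API) are all hypothetical. One caveat: PodSecurityPolicy was removed in Kubernetes 1.25 in favor of Pod Security Admission, so the example baseline stands in for a Pod Security Admission enforcement level (set through the `pod-security.kubernetes.io/enforce` namespace label) rather than a PodSecurityPolicy.

```python
from typing import Dict, Optional, Tuple

Settings = Dict[str, str]
Drift = Dict[str, Dict[str, Tuple[Optional[str], str]]]

# Hypothetical production baseline; the keys are illustrative names, not a Kubernetes API.
PROD_BASELINE: Settings = {
    # Pod Security Admission level enforced on application namespaces.
    "psa.enforce": "restricted",
    # Whether a default-deny ingress NetworkPolicy exists in each app namespace.
    "netpol.default-deny-ingress": "present",
}


def find_drift(baseline: Settings, clusters: Dict[str, Settings]) -> Drift:
    """Per cluster, list settings that are missing or differ from the baseline.

    Each entry maps a setting to (actual, expected); actual is None if absent.
    Clusters with no drift are omitted from the result.
    """
    drift: Drift = {}
    for name, settings in clusters.items():
        diffs = {
            key: (settings.get(key), expected)
            for key, expected in baseline.items()
            if settings.get(key) != expected
        }
        if diffs:
            drift[name] = diffs
    return drift


# Hypothetical settings collected from two production clusters.
collected = {
    "prod-us": {"psa.enforce": "restricted", "netpol.default-deny-ingress": "present"},
    "prod-eu": {"psa.enforce": "baseline"},
}
for cluster, diffs in find_drift(PROD_BASELINE, collected).items():
    for key, (actual, expected) in diffs.items():
        print(f"{cluster}: {key} is {actual!r}, expected {expected!r}")
```

Running a check like this on a schedule, or as a pipeline step after cluster changes, turns "consistent across production" into something you verify rather than assume.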

Then there is application security itself. You should ensure that your team is taking appropriate steps to secure the software development life cycle. Container image security and vulnerability scanning are critical: look at your container image, identify the base image you are using, analyze the security vulnerabilities associated with it, and make sure the image is safe to use.
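A common way to act on vulnerability scanning is to gate the CI pipeline on the scan results. The Python sketch below assumes a hypothetical, simplified report format (a JSON list of findings with `id`, `package`, and `severity` fields) and a severity scale from UNKNOWN through CRITICAL. Real scanners emit richer formats, so the parsing would need to be adapted to whichever tool you use, and the finding IDs in the example are placeholders.

```python
import json

# Severity scale, least to most severe (an assumption; match your scanner's labels).
SEVERITY_RANK = {"UNKNOWN": 0, "LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(findings: list[dict], threshold: str = "HIGH") -> list[dict]:
    """Return the findings at or above the threshold severity."""
    limit = SEVERITY_RANK[threshold]
    # Unrecognized severities are treated as UNKNOWN (rank 0).
    return [f for f in findings if SEVERITY_RANK.get(f["severity"], 0) >= limit]


def gate(report_json: str, threshold: str = "HIGH") -> int:
    """Print blocking findings and return a process exit code (1 fails the build)."""
    findings = json.loads(report_json)
    blocked = blocking_findings(findings, threshold)
    for f in blocked:
        print(f"{f['severity']}: {f['id']} in {f['package']}")
    return 1 if blocked else 0


# Hypothetical report for an image built on an outdated base image.
report = json.dumps([
    {"id": "EXAMPLE-1", "package": "openssl", "severity": "CRITICAL"},
    {"id": "EXAMPLE-2", "package": "zlib", "severity": "LOW"},
])
exit_code = gate(report)
```

A CI step would pass the scanner's output to `gate` and call `sys.exit` with the result, so that a serious finding in the base image stops the image from being promoted.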

Authentication and authorization should be considered at both the application level and the cluster level. From the application perspective, analyze whether your app components are communicating with each other in the right way: when service A talks to service B, is it actually communicating with service B and not with an impersonator? From the cluster perspective, analyze how users authenticate to the cluster and control what they are allowed to do in it (via role-based access control, known as RBAC). You should also consider network security on the underlying infrastructure the cluster runs on and, if you are running web-based applications, application-layer security against threats such as HTTP-based attacks and malformed requests. There’s a lot to consider.
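Kubernetes RBAC is additive: a request is allowed only if some rule in a role bound to the user grants that verb on that resource, and there are no deny rules. The Python sketch below models that evaluation in simplified form, with namespaced roles and bindings only (ClusterRoles, ClusterRoleBindings, groups, and service accounts are left out). The `pod-reader` role, the user `alice`, and the `dev` namespace are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One policy rule; "*" acts as a wildcard and "" is the core API group."""
    api_groups: tuple[str, ...]
    resources: tuple[str, ...]
    verbs: tuple[str, ...]

    def allows(self, api_group: str, resource: str, verb: str) -> bool:
        def match(allowed: tuple[str, ...], value: str) -> bool:
            return "*" in allowed or value in allowed

        return (match(self.api_groups, api_group)
                and match(self.resources, resource)
                and match(self.verbs, verb))


@dataclass(frozen=True)
class RoleBinding:
    """Grants a named role to a user within a single namespace."""
    user: str
    role: str
    namespace: str


def is_allowed(roles: dict[str, list[Rule]], bindings: list[RoleBinding],
               user: str, namespace: str, api_group: str,
               resource: str, verb: str) -> bool:
    """Allowed if any rule of any role bound to the user in this namespace matches."""
    return any(
        rule.allows(api_group, resource, verb)
        for b in bindings
        if b.user == user and b.namespace == namespace
        for rule in roles.get(b.role, [])
    )


# Hypothetical read-only role for pods, bound to alice in the dev namespace.
roles = {"pod-reader": [Rule(("",), ("pods",), ("get", "list", "watch"))]}
bindings = [RoleBinding(user="alice", role="pod-reader", namespace="dev")]
print(is_allowed(roles, bindings, "alice", "dev", "", "pods", "list"))    # True
print(is_allowed(roles, bindings, "alice", "dev", "", "pods", "delete"))  # False
print(is_allowed(roles, bindings, "alice", "prod", "", "pods", "list"))   # False
```

Because there are no deny rules, restricting what a user can do means removing bindings or narrowing rules, which is why reviewing bindings regularly matters.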

Mr. Scott Lowe is a subject matter expert on DevOps and has been in the IT field for more than 25 years. He currently works for VMware in the Cloud-Native Apps group. In this interview, he shares insights on how to establish a successful DevOps setup and what to consider along the way.

Conceptualized by:

Aarathy Jayakrishnan

Aarathy worked as a Digital Marketing analyst at ThinkPalm. She is passionate about social media marketing, content marketing and SEO. Her hobbies include reading and dancing.