Here are some interesting facts about mobile usage across the globe. The world’s population is currently estimated at 7.4 billion, and 5.5 billion people own a cell phone! Today, an ever-growing percentage of these devices are smartphones. According to a recent Pew Research Center study, the number of users accessing the internet on their smartphones has more than doubled in the past five years, as has the number of users downloading and using mobile apps. Of those who use the internet or email on their phones, more than a third go online primarily through their handheld devices.

Why should we conduct mobile app performance testing?

The 2013 mobile commerce statistics show that 66% of shoppers abandoned their transactions, and 50% of those abandoned transactions were due to poor performance! A delay as minuscule as 1 second can mean a 7% drop in conversions, which is critical for high-volume retail and results in a $24.5 billion loss. The 2015 Jumio Mobile Consumer Insights Study reveals that more than half of U.S. smartphone owners (56%) have abandoned a mobile transaction. The top two reasons users abandon a purchase or account registration are slow loading times (36%) and difficulty navigating the checkout process (31%).

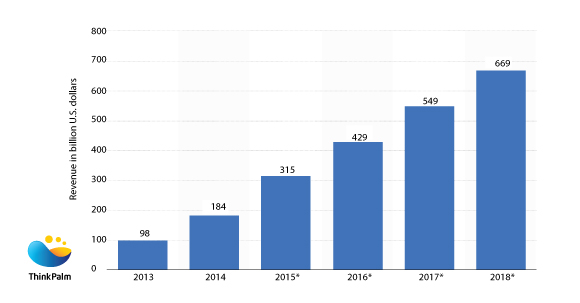

Now, let us look at worldwide mobile retail commerce revenue from 2013 to 2018 (in billions of U.S. dollars).

In 2014, global mobile e-commerce revenue amounted to 184 billion U.S. dollars, and it is projected to reach a whopping 669 billion U.S. dollars in 2018! I believe these statistics make it clear why the performance of mobile apps is so important for your business.

How is mobile app performance testing different from other performance testing?

It is interesting to note that mobile users have the same expectations as users of traditional websites. They don’t expect response times to be any worse just because they are accessing resources from smartphones and tablets. Users always have the choice to abandon your site for a competitor’s if they feel the performance is not up to standard.

There are lots of ways to improve performance. I don’t believe in “best practices”, because to me the term implies a way of doing a task that is sure to improve mobile app performance in every situation. I do believe in guidelines and in better approaches for a given situation. I want to make it clear that what we will be discussing are measures I found successful at work, ideas and approaches I learned by talking with others, and things from articles I have read and sessions I have attended. Please don’t apply these approaches blindly at work. Think about them, incorporate them into your existing body of knowledge, understand how they do or don’t apply to your context, and challenge them. The key step is to contribute your thoughts, findings, and experiences back to the community!

Most importantly, how can we adopt mobile performance testing in our testing phase?

We need to understand how users will access our systems. Assessing the user profile and getting the user workload distribution right have always been huge factors in gaining value from performance testing: they are the inputs that drive our tests in the test environment, and the value of the results depends on them. From a mobile perspective, there are two criteria we need to focus on measuring:

1. User profile

2. Load profile

When measuring the user profile, we have to determine the business processes users perform, how they access the system (native app, mobile site, or full site), their preferred browser, network conditions, geographical location, and so on.

When we consider the load profile, we must evaluate the volume of users by business process, by location, and by access type, as well as how frequently they use the app. We should get constant feedback from application logs, keep a continuous feedback loop, and employ other monitoring solutions. Most organizations have updated their application logs and other analytics solutions so they can evaluate and adjust test inputs over time. This is especially important for mobile, given the rate of change in mobile technology and its inherent diversity.
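To make the load profile concrete, here is a minimal sketch in Python of turning log records into a workload mix, that is, each business process’s or access type’s share of traffic, which can then be used to weight the virtual users in a load test. The `LogRecord` fields and the `workload_mix` helper are hypothetical; real records would be parsed from your own application logs or analytics export, in whatever format those use.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, Hashable, Iterable


@dataclass(frozen=True)
class LogRecord:
    """One request taken from (hypothetical) application logs."""
    business_process: str  # e.g. "browse", "checkout"
    access_type: str       # "native_app", "mobile_site" or "full_site"
    location: str          # e.g. a country or region code


def workload_mix(records: Iterable[LogRecord],
                 key: Callable[[LogRecord], Hashable]) -> Dict[Hashable, float]:
    """Return each key value's share of total traffic as a percentage."""
    counts = Counter(key(r) for r in records)
    total = sum(counts.values())
    return {k: round(100 * n / total, 1) for k, n in counts.items()}


if __name__ == "__main__":
    # Illustrative sample only; real input would come from your own logs.
    sample = [
        LogRecord("browse", "native_app", "US"),
        LogRecord("browse", "mobile_site", "US"),
        LogRecord("browse", "native_app", "IN"),
        LogRecord("checkout", "native_app", "US"),
    ]
    print(workload_mix(sample, key=lambda r: r.business_process))
    print(workload_mix(sample, key=lambda r: r.access_type))
```

Because the grouping key is a parameter, the same helper gives the distribution by business process, access type, or location, and rerunning it on fresh logs is one way to keep the feedback loop described above going.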

How can we measure device performance?

Of course, our app can affect the performance of the device! After running the tests, it will be clear that performance differs from device to device, and our application should be compatible with all devices and operating systems. At a minimum, we should measure and track the app’s usage of CPU, memory, input/output, transaction response time, battery drain, and storage. We can also use automation tools such as TouchTest (SOASTA), TRUST (Mobile Labs), and MonkeyTalk (Gorilla Logic) to measure device performance.
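Collecting the raw numbers is tool-specific, but tracking them can be simple. The sketch below assumes the measurements for each device have already been captured (by one of the tools above or a platform profiler) and flags the metrics that exceed a budget. The `DeviceSample` fields, the `BUDGETS` values, and the device names are all hypothetical placeholders, not recommended thresholds.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class DeviceSample:
    """Resource usage captured for one app run on one device (illustrative fields)."""
    device: str
    os_version: str
    cpu_pct: float                   # average CPU usage during the scenario
    memory_mb: float                 # peak memory used by the app
    battery_drain_pct_per_hr: float  # battery drain while the scenario runs
    storage_mb: float                # on-device storage used by the app
    transaction_ms: float            # median transaction response time


# Hypothetical per-metric budgets; derive real ones from your own requirements.
BUDGETS: Dict[str, float] = {
    "cpu_pct": 40.0,
    "memory_mb": 250.0,
    "battery_drain_pct_per_hr": 8.0,
    "storage_mb": 150.0,
    "transaction_ms": 2000.0,
}


def over_budget(sample: DeviceSample) -> Dict[str, float]:
    """Return the metrics on which this device exceeded its budget."""
    return {
        metric: getattr(sample, metric)
        for metric, limit in BUDGETS.items()
        if getattr(sample, metric) > limit
    }


if __name__ == "__main__":
    # Made-up readings for two hypothetical devices.
    samples = [
        DeviceSample("flagship-device", "OS 2", 22.0, 180.0, 5.5, 90.0, 1200.0),
        DeviceSample("low-end-device", "OS 1", 55.0, 240.0, 9.1, 90.0, 2600.0),
    ]
    for s in samples:
        print(s.device, s.os_version, over_budget(s) or "within budget")
```

Keeping the device and OS version on every sample makes it easy to see when a problem appears only on certain hardware, which is exactly the device-to-device variation described above.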

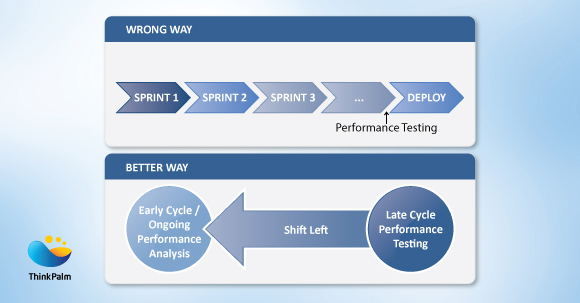

In agile environments, I have noticed organizations keeping the same approach of running performance tests only after a handful of sprints. The reality is delayed releases, high risk, and so on: by the end of those sprints, we realize far too late that there are performance issues. I’m sure you would agree that performance issues found at a later stage are much more likely to have a major impact on the design and architecture, and to cause other functional issues. So there is definitely a benefit in understanding performance issues early in the cycle.

Better way:

So we need to find another way, and the way to do that is to look at the ultimate goal: shifting performance testing from late in the cycle to early in the cycle. We should aim for performance analysis, and I use the word “analysis” intentionally, because it is really more than just performance testing.

It would be better to include performance analysis practices in every sprint. However, performance is a non-functional requirement, and most stakeholders will not be concerned with performance requirements at the start of a sprint. Performance objectives aren’t part of user stories, agile teams lack performance engineering expertise, and APM (Application Performance Management) tools and skills are non-existent; all of this stops us from analyzing performance continuously.

To fill these gaps, we should begin developing a performance-focused culture that captures non-functional requirements early in each sprint, makes performance everyone’s job, and creates a role to coordinate performance activities.

Start with practices such as incorporating performance requirements as acceptance criteria for existing user stories.

For example:

(i) Set a response-time target for every step in the product configuration process: say, less than 2 seconds.

(ii) Set an expected load point: for example, 1,500 dealers concurrently using the system to configure products for customer orders. The combined criterion then reads: the system will respond in less than 2 seconds for all steps in the product configuration process for up to 1,500 concurrent users configuring orders.
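An acceptance criterion like this can be checked automatically after every load test run. This is a minimal sketch, assuming the load tool can export one measurement per configuration step together with the number of concurrent users at that moment; `StepMeasurement` and `acceptance_failures` are hypothetical names. It treats the criterion as two conditions: every step responds in under 2 seconds at up to 1,500 users, and the run actually reached 1,500 users (otherwise the criterion was never exercised).

```python
from dataclasses import dataclass
from typing import Iterable, List

# Values from the example acceptance criterion.
MAX_RESPONSE_S = 2.0
TARGET_CONCURRENT_USERS = 1500


@dataclass
class StepMeasurement:
    step: str              # a step in the product configuration process
    concurrent_users: int  # active virtual users when the step ran
    response_s: float      # measured response time in seconds


def acceptance_failures(measurements: Iterable[StepMeasurement]) -> List[str]:
    """List every violation of the criterion; an empty list means it passed."""
    failures = []
    peak_users = 0
    for m in measurements:
        peak_users = max(peak_users, m.concurrent_users)
        # "Less than 2 seconds" means 2.0 s or more is a failure.
        if m.concurrent_users <= TARGET_CONCURRENT_USERS and m.response_s >= MAX_RESPONSE_S:
            failures.append(f"{m.step}: {m.response_s:.2f}s at {m.concurrent_users} users")
    if peak_users < TARGET_CONCURRENT_USERS:
        failures.append(f"load only reached {peak_users} of {TARGET_CONCURRENT_USERS} users")
    return failures
```

For a run with "select model" at 1.40 s, "choose options" at 2.30 s, and "price quote" at 1.90 s, all at 1,500 users, the only reported failure is `choose options: 2.30s at 1500 users`. Returning a list of readable messages, rather than a bare pass/fail flag, gives the team something to act on in the sprint review.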

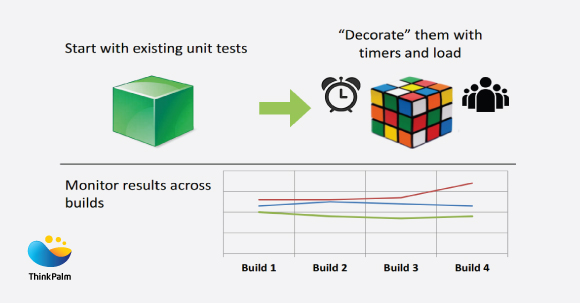

When we implement performance testing in agile, we have to incorporate performance testing and analysis, unit performance testing, and component- and system-level performance testing into each sprint, and also include performance tests in the continuous integration chain for every build.
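Unit performance testing can start small. As an illustration, the sketch below uses Python’s standard `timeit` and `unittest` modules to fail a test when a function exceeds a per-call time budget. `price_configuration` is a hypothetical unit under test and the 5 ms budget is an arbitrary placeholder; taking the best of several repeats reduces noise from a busy build agent.

```python
import timeit
import unittest


def price_configuration(options):
    """Hypothetical unit under test: total price of the selected product options."""
    return sum(price for _, price in options)


class PriceConfigurationPerfTest(unittest.TestCase):
    # Hypothetical per-call budget; tune it to your own code and CI agents.
    BUDGET_S = 0.005
    CALLS = 100

    def test_price_configuration_is_within_budget(self):
        options = [(f"option-{i}", 10.0) for i in range(1_000)]
        # Best of 5 repeats of CALLS calls each, to reduce noise from the host.
        best = min(timeit.repeat(lambda: price_configuration(options),
                                 number=self.CALLS, repeat=5))
        per_call = best / self.CALLS
        self.assertLess(per_call, self.BUDGET_S,
                        f"{per_call * 1e3:.3f} ms per call exceeds budget")
```

Run it like any other unit test (for example with `python -m unittest`), so it executes in every build alongside the functional tests.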

When we move into the component- and system-level testing phases, we should:

1. Map user stories onto system components

2. Adapt or adopt a benchmark in the development/QA environment

3. Look for trends in measurements rather than absolute values

4. Use service virtualization for third-party or yet-to-be-developed components

5. Incorporate the tests into the continuous integration chain

6. Harden scripts against application changes
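Looking for trends rather than absolutes matters because a development/QA environment rarely matches production, so a fixed threshold there says little. A minimal sketch of the alternative, assuming each build records a response time for the same scenario: compare the current build against the average of the last few builds and flag a regression only when it is clearly slower. `is_regression`, the 10% tolerance, and the five-build window are hypothetical choices to tune for your own noise level.

```python
from statistics import mean
from typing import Sequence


def is_regression(history: Sequence[float], current: float,
                  max_increase_pct: float = 10.0, window: int = 5) -> bool:
    """Flag a regression when the current build is clearly slower than the recent trend.

    history: response times (seconds) from previous builds, oldest first.
    max_increase_pct: allowed slowdown relative to the recent average.
    window: how many recent builds form the baseline.
    """
    if len(history) < window:
        return False  # not enough builds yet to establish a trend
    baseline = mean(history[-window:])
    return current > baseline * (1 + max_increase_pct / 100)
```

With a recent history of 1.20, 1.25, 1.18, 1.22, and 1.21 seconds (an average of 1.212 s), a build at 1.30 s passes and a build at 1.45 s is flagged. Because the baseline moves with the history, an environment that is consistently slower than production does not trigger false alarms.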

At the end of each performance testing stage, make a habit of recording the lessons learned, because next time the trend might change. Add performance tests to continuous integration as well; custom plug-ins exist to run performance tests as part of the CI chain, and they are very useful. But people get mad when you break the build unnecessarily, so:

(i) Allow for a non-production-like environment

(ii) Set your response time tolerances and client timeouts accordingly

(iii) Add error handling and logging to scripts to enable quick failure investigation
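The three points above can be combined in a small script wrapper. This is a minimal sketch, not a specific tool’s API: `run_step` is a hypothetical helper that times one scripted step, passes a client timeout to the action so it can hand it to its HTTP client, compares the elapsed time with a tolerance you would set loosely enough for a non-production-like environment, and logs the error and timing of any failure so it can be investigated quickly. The `fake_login` and `fake_search` steps only stand in for real requests.

```python
import logging
import time
from dataclasses import dataclass
from typing import Callable, Optional

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("perf")


@dataclass
class StepResult:
    name: str
    elapsed_s: float
    passed: bool
    error: Optional[str] = None


def run_step(name: str, action: Callable[[float], object],
             tolerance_s: float, client_timeout_s: float) -> StepResult:
    """Run one scripted step, time it, and log enough context to debug a failure.

    `action` receives the client timeout so it can pass it on to its HTTP client.
    """
    start = time.perf_counter()
    try:
        action(client_timeout_s)
    except Exception as exc:  # record the failure instead of aborting the whole run
        elapsed = time.perf_counter() - start
        log.error("step=%s failed after %.3fs: %r", name, elapsed, exc)
        return StepResult(name, elapsed, False, repr(exc))
    elapsed = time.perf_counter() - start
    if elapsed > tolerance_s:
        log.warning("step=%s took %.3fs (tolerance %.3fs)", name, elapsed, tolerance_s)
        return StepResult(name, elapsed, False, "exceeded tolerance")
    log.info("step=%s ok in %.3fs", name, elapsed)
    return StepResult(name, elapsed, True)


if __name__ == "__main__":
    def fake_login(timeout_s: float) -> None:
        time.sleep(0.01)  # stands in for a request sent with timeout=timeout_s

    def fake_search(timeout_s: float) -> None:
        raise TimeoutError(f"no response within {timeout_s}s")

    results = [
        run_step("login", fake_login, tolerance_s=0.5, client_timeout_s=5.0),
        run_step("search", fake_search, tolerance_s=0.5, client_timeout_s=5.0),
    ]
    print("failed steps:", [r.name for r in results if not r.passed])
```

Keeping the client timeout separate from the tolerance is deliberate: the timeout stops a hung request from stalling the build, while the tolerance decides whether a completed step was too slow.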

Finally, it is very important that we consider the following:

- Mobile users are not the same as connected users. We should understand that their usage patterns and devices inherently affect the performance of the system.

- Performance is not limited to the front end or the network. Back-end performance is critical, and since we don’t have unlimited resources, we need to understand that it is going to affect our applications as well.

- We need to start pushing performance analysis to the left in the development cycle. Finding out at the end of the cycle, just before releasing to the user community, that we have performance issues is obviously not ideal! We need to shift from end-of-cycle performance testing to ongoing performance analysis.