Virtual Reality, Augmented Reality and Mixed Reality are often used interchangeably, but the terms describe different technologies. In this blog we cover the following:

- Difference between VR, AR and MR

- Displays and some of the trackers used

- Processing techniques and representation

- Microsoft HoloLens

- Developing apps for Windows Mixed Reality

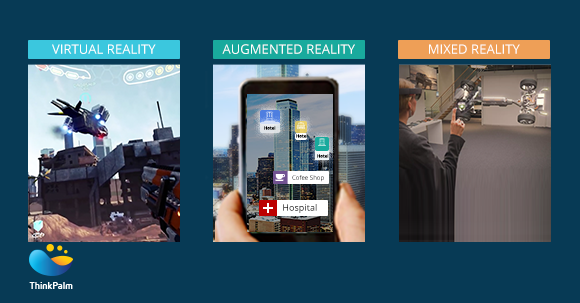

Virtual Reality:

VR aims to make you feel completely immersed in another world and blocks everything else out. It is a totally virtual environment with none of the real world visible. The user is placed in a completely different space from their actual location. The space is either computer generated or captured on video, and it entirely occludes the user’s actual surroundings.

VR devices are usually compact, opaque head-mounted displays.

Augmented Reality:

AR is any sort of computer technology that overlays data on top of your current view, while continuing to let you see the world around you. Along with visual data, you can augment sound too. A GPS app that narrates directions to you as you walk could technically be an AR app. Augmented Reality is the integration of digital or computer-generated information with the real environment in real time. Unlike virtual reality, which creates a completely artificial environment, augmented reality uses the existing real environment and overlays new information on top of it.

Augmented reality headsets overlay data, 3D objects and video onto your otherwise natural vision. The overlaid objects need not emulate anything in the real world. For example, text hovering in your field of view does not emulate a real-world object, but it is useful data.

Mixed Reality:

Mixed Reality augments the real world with virtual objects as if they were really placed within the real environment. The virtual objects lock their positions relative to real-world objects so as to produce a seamless view (e.g. placing a virtual cat or a ball onto a real table and having the cat or ball remain on the table while we walk around the table, viewing it from different angles). Mixed Reality covers everything between a fully real view and a completely virtual one.

Mixed reality works towards seamless integration of your augmented reality with your perception of the real world. That is, juxtaposing “real” entities together with “virtual” ones.

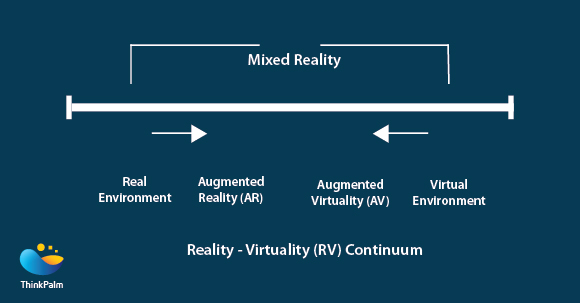

One of the earliest references to Mixed Reality appears in the 1994 research paper by Paul Milgram and Fumio Kishino entitled “A Taxonomy of Mixed Reality Visual Displays”. They define the “virtuality continuum”, also known as the “Reality-Virtuality (RV) continuum”.

A Real Environment contains only real things and no computer-generated items. This includes a real scene observed on a conventional video display as well as the same scene viewed directly, with or without a pane of plain glass in between. A Virtual Environment contains only virtual, computer-generated objects. The whole environment is created virtually and contains no real object, whether viewed directly or through a camera. A computer graphics simulation of an aeroplane is an example of a virtual environment. Mixed Reality is defined as an environment in which real-world and virtual-world objects are presented together within a single display. It lies anywhere between the extrema of the virtuality continuum, that is, between the fully real and the fully virtual environment.

Augmented Reality is where virtual objects are brought into the real-world view, like a Heads-Up-Display (HUD) on an aircraft windscreen.

Augmented Virtuality involves environments where a virtual world has certain real world elements within it, like having a visual of your hand in a virtual environment or being able to view other people in the room, inside the virtual environment.
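
The continuum above can be pictured as a number line. The following is only a rough sketch: the paper describes the continuum qualitatively, so the numeric `virtual_fraction` scale and the 0.5 cut-off between Augmented Reality and Augmented Virtuality are assumptions made purely for illustration.

```python
def classify_on_continuum(virtual_fraction: float) -> str:
    """Name the region of the virtuality continuum for a given scene.

    virtual_fraction is a hypothetical measure: 0.0 means the view holds only
    real content, 1.0 means it holds only computer-generated content.
    """
    if not 0.0 <= virtual_fraction <= 1.0:
        raise ValueError("virtual_fraction must be between 0.0 and 1.0")
    if virtual_fraction == 0.0:
        return "Real Environment"
    if virtual_fraction == 1.0:
        return "Virtual Environment"
    # Assumed cut-off: mostly real views count as AR, mostly virtual as AV.
    if virtual_fraction < 0.5:
        return "Augmented Reality"
    return "Augmented Virtuality"


def is_mixed_reality(virtual_fraction: float) -> bool:
    """Mixed Reality is everything strictly between the two extrema."""
    return 0.0 < virtual_fraction < 1.0


if __name__ == "__main__":
    for fraction in (0.0, 0.2, 0.8, 1.0):
        print(fraction, classify_on_continuum(fraction), is_mixed_reality(fraction))
```

A HUD that adds a few symbols to a real view would sit near the real end of this scale, while a virtual room that shows only your real hands would sit near the virtual end.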

Classification based on Display Environments for MR

- Monitor-based, non-immersive video displays, such as computer monitors, onto which software-generated graphics or images are digitally overlaid.

- Video displays like the monitor-based ones above, but using immersive head-mounted displays (HMDs) instead of monitors. The computer-generated images are again electronically or digitally overlaid.

- Head-mounted displays with a see-through capability. These are partially immersive HMDs in which the computer-generated scenes or graphics are optically superimposed onto real-world scenes.

- Head-mounted displays without see-through capability, where the real, immediate outside world is displayed on the screen using video captured through one or more cameras. The displayed video has to correspond orthoscopically with the real world outside. This creates a video see-through that stands in for an optical (real) see-through.

- Fully graphic display environments to which video reality is added. These kinds of displays are closer to Augmented Virtuality than to Augmented Reality. They can be completely immersive, partially immersive or otherwise.

- Fully graphic environments in which real, physical objects interact with the computer-generated virtual environment. These are partially immersive environments. A fully virtual room where the user’s physical hand can be used to grab a virtual doorknob is an example.

Display Devices that are used for Mixed Reality Display

Monitors: Traditional computer monitors or other display devices such as televisions.

Hand-held devices: These include mobile phones and tablets with a camera. The real scene is captured through the camera and the virtual scene is added to it dynamically.

Head-mounted displays: These are display devices that are mounted on the user’s head, with the display sitting in front of the eyes. They use sensors that track the head in six degrees of freedom (position and orientation), which allows the system to align virtual information with the physical world and adjust it as the user’s head moves. VR headsets and Microsoft HoloLens are examples.
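
To make the alignment idea concrete, here is a minimal sketch of how a world-locked virtual object could be re-expressed in the headset’s own coordinate frame as the head moves. It is an illustration under simplifying assumptions, not how any particular headset does it: only yaw (turning left or right) is handled out of the three rotation axes, the head is assumed to look along its local -z axis with y pointing up, and the function name `world_to_head` is made up for this example.

```python
import math


def world_to_head(point, head_position, head_yaw):
    """Express a fixed world-space point in the head's local frame.

    point, head_position: (x, y, z) tuples in world coordinates.
    head_yaw: rotation of the head about the vertical (y) axis in radians,
              positive when turning left. Pitch and roll are ignored here.
    """
    # Step 1: translate so that the head sits at the origin.
    dx = point[0] - head_position[0]
    dy = point[1] - head_position[1]
    dz = point[2] - head_position[2]
    # Step 2: undo the head rotation (rotate by -head_yaw about the y axis).
    cos_y, sin_y = math.cos(head_yaw), math.sin(head_yaw)
    return (dx * cos_y - dz * sin_y, dy, dx * sin_y + dz * cos_y)


if __name__ == "__main__":
    hologram = (0.0, 0.0, -2.0)  # two metres in front of the starting pose
    print(world_to_head(hologram, (0.0, 0.0, 0.0), 0.0))
    # After the user steps one metre to the right, the hologram appears
    # one metre to the left in the head frame, so it stays put in the room.
    print(world_to_head(hologram, (1.0, 0.0, 0.0), 0.0))
```

The renderer would redo this calculation every frame with the latest tracked pose, which is what keeps the virtual object fixed in the room while the user moves.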

Eye-glasses: These devices are worn like ordinary glasses. The glasses may contain cameras that capture the real-world view and show the augmented view on the eyepieces. In some devices, the AR imagery is projected through or reflected off the lenses. Google Glass and similar devices are examples of such displays.

Heads-up-Display: The display is at eye level, so the user need not shift their gaze to read the information. Information projected on the windshields of cars and aircraft is an example.

Contact-lens: In development; will include ICs, LEDs, and antenna for wireless communication.

Virtual retinal display: With this technology, a display is scanned directly onto the retina of the viewer’s eye.

Spatial Augmented Reality: SAR uses multiple projectors to display virtual or graphical information onto real world objects. In SAR, the display is not attached to a single user. Therefore, it scales up to groups of users, thus allowing collocated collaboration between users.

Trackers used to define the Mixed Reality scene

Digital cameras and other optical sensors: Used to capture video and other optical information from the real scene.

Accelerometers: Used to measure the acceleration of the device, from which its movement and speed can be estimated.

GPS: Used to identify the geo-position of the device that can in turn be used to provide location specific inputs to the scene.

Gyroscopes: Used to measure and maintain the orientation and angular velocity of the device.

Solid-state compasses: Used to determine the heading (compass direction) of the device.

RFID or Radio-Frequency Identification: Works by attaching tags to objects in the scene, which the device can read using radio waves. RFID tags can be read even if the tag is not in the line of sight and is several feet away.

Other Wireless Sensors are also used for tracking devices in the real/virtual space.
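
In practice, readings from several of these trackers are usually combined, because each one has weaknesses on its own. As a hedged illustration, the sketch below uses a complementary filter, one common and simple fusion technique, to estimate the pitch of a device from a gyroscope and an accelerometer. The axis convention, the `alpha` weight of 0.98 and the function names are assumptions chosen for this example.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch angle (radians) implied by the gravity direction.

    Assumes the device is not accelerating, so the accelerometer
    reads gravity only. The sign convention is an assumption.
    """
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(pitch, gyro_rate, accel, dt, alpha=0.98):
    """Blend a gyroscope step with the accelerometer's absolute estimate.

    pitch:     previous pitch estimate in radians
    gyro_rate: angular velocity about the pitch axis in rad/s
    accel:     (ax, ay, az) accelerometer reading
    dt:        time since the previous sample in seconds
    alpha:     trust placed in the gyroscope (assumed value)
    """
    gyro_estimate = pitch + gyro_rate * dt   # smooth, but drifts over time
    accel_estimate = accel_pitch(*accel)     # noisy, but does not drift
    return alpha * gyro_estimate + (1.0 - alpha) * accel_estimate


if __name__ == "__main__":
    g = 9.81
    true_pitch = 0.2
    reading = (-g * math.sin(true_pitch), 0.0, g * math.cos(true_pitch))
    pitch = 0.0
    for _ in range(500):  # device held still at a 0.2 rad pitch
        pitch = complementary_filter(pitch, 0.0, reading, dt=0.01)
    print(round(pitch, 3))
```

The gyroscope keeps the estimate responsive from sample to sample, while the accelerometer slowly pulls it back towards the true angle and cancels the drift.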

Techniques used for Processing Scenes and Representation

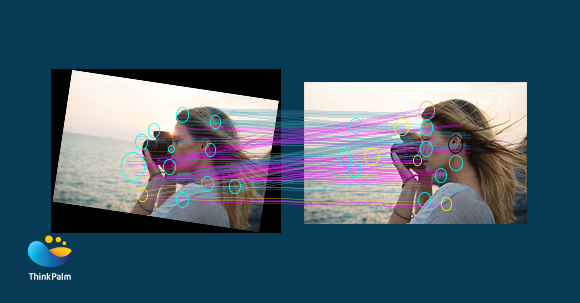

Image Registration

Registration is the process of aligning two images so that corresponding pixels refer to the same points in the scene. The two images can come from different sensors at the same time (stereo imaging) or from the same sensor at different times (remote sensing). In an augmented reality platform, the two images might be the real environment and the virtual environment. Registration is required so that the images can be combined to improve information extraction.

Image registration draws on Computer Vision, the field concerned with making computers achieve a high-level understanding of digital information such as images or videos. Computer Vision methods related to video tracking are the most useful in Mixed Reality environments.

Video tracking is the process of locating one or more moving objects captured by a camera over time. The objective is to associate target objects across different video frames. To do this, tracking algorithms analyse sequential video frames, study the movement of the target objects and output their positions. The two main parts of a visual tracking system are target representation and localization (identifying the object and finding its location), and filtering and data association (incorporating prior knowledge of the scene, dealing with the dynamics of the object and evaluating different hypotheses).
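
As a toy illustration of registration, the sketch below finds the pure translation that best aligns two small grayscale images by trying every shift in a small range and keeping the one with the lowest mean squared difference. Real registration pipelines also handle rotation, scale and lens distortion and use far more efficient methods. The function name `estimate_shift`, the list-of-lists image format and the brute-force search are all assumptions made for this example.

```python
def estimate_shift(reference, moving, max_shift=3):
    """Return (dx, dy) such that reference[y][x] best matches moving[y + dy][x + dx].

    Both images are equally sized lists of rows of numbers.
    Only whole-pixel translations up to max_shift are considered.
    """
    height, width = len(reference), len(reference[0])
    best = None  # (score, dx, dy)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            error, count = 0.0, 0
            for y in range(height):
                for x in range(width):
                    my, mx = y + dy, x + dx
                    # Compare only pixels that overlap after shifting.
                    if 0 <= my < height and 0 <= mx < width:
                        diff = reference[y][x] - moving[my][mx]
                        error += diff * diff
                        count += 1
            if count == 0:
                continue
            score = error / count  # mean squared difference over the overlap
            if best is None or score < best[0]:
                best = (score, dx, dy)
    return best[1], best[2]


if __name__ == "__main__":
    size = 8
    reference = [[0] * size for _ in range(size)]
    reference[3][3], reference[3][4], reference[4][3] = 9, 5, 7
    # Build a second image: the same pattern moved 2 pixels right, 1 pixel down.
    moving = [[0] * size for _ in range(size)]
    for y in range(size - 1):
        for x in range(size - 2):
            moving[y + 1][x + 2] = reference[y][x]
    print(estimate_shift(reference, moving))
```

Once the shift is known, the two images can be overlaid so that the same scene point lands on the same pixel in both.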

Common target representation and localization algorithms are:

- Kernel-based tracking: A localization procedure based on maximizing a similarity measure (a real-valued function that quantifies the similarity between two objects). It works iteratively. This method is also called mean-shift tracking.

- Contour tracking: These methods evolve an initial contour from its position in the previous frame to its new position in the next frame. They also work iteratively, from frame to frame, and are used to deduce object boundaries. The Condensation algorithm is an example.
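
The iterative nature of mean-shift tracking can be sketched in a few lines. This is a simplified version under stated assumptions: it takes a ready-made `weights` map in which each cell says how strongly that pixel resembles the target, and it uses a flat square window. A full tracker would compute those weights from a kernel-weighted colour histogram of the target in every frame. All names here are made up for the example.

```python
def mean_shift(weights, start, half_size, max_iter=20):
    """Move a search window towards the densest nearby region of `weights`.

    weights:   list of rows; weights[y][x] is the similarity of pixel (x, y)
    start:     (x, y) centre of the window, e.g. the target's previous position
    half_size: the window covers centre +/- half_size in both directions
    Returns the (x, y) centre at which the window stops moving.
    """
    height, width = len(weights), len(weights[0])
    cx, cy = start
    for _ in range(max_iter):
        total = sum_x = sum_y = 0.0
        for y in range(max(0, cy - half_size), min(height, cy + half_size + 1)):
            for x in range(max(0, cx - half_size), min(width, cx + half_size + 1)):
                w = weights[y][x]
                total += w
                sum_x += w * x
                sum_y += w * y
        if total == 0:
            break  # nothing resembling the target inside the window
        # Shift the window centre to the weighted mean of the window contents.
        new_cx, new_cy = round(sum_x / total), round(sum_y / total)
        if (new_cx, new_cy) == (cx, cy):
            break  # converged
        cx, cy = new_cx, new_cy
    return cx, cy


if __name__ == "__main__":
    weights = [[0.0] * 12 for _ in range(12)]
    weights[5][7] = 4.0  # strongest match at x=7, y=5
    for x, y in ((6, 5), (8, 5), (7, 4), (7, 6)):
        weights[y][x] = 1.0
    print(mean_shift(weights, start=(5, 4), half_size=3))
```

Running this on each new frame, starting from the position found in the previous frame, is what turns the localization step into a tracker.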

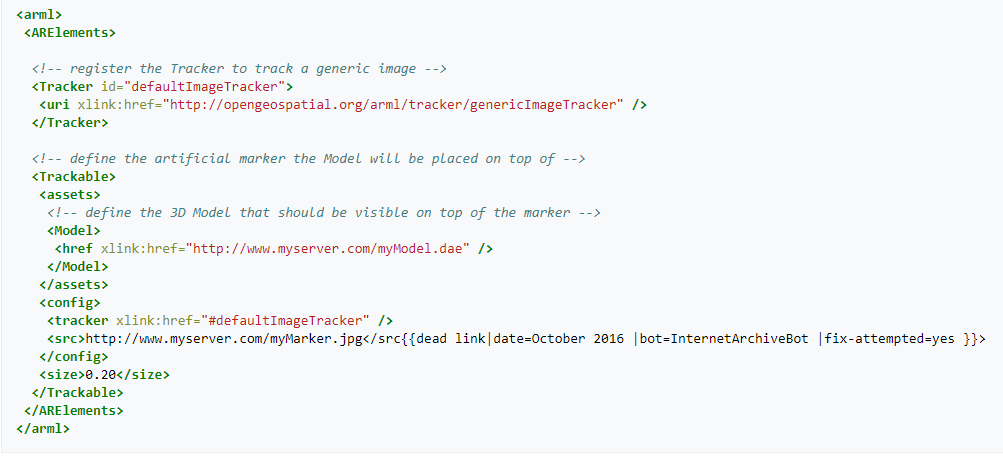

Augmented Reality Mark-up Language (ARML)

ARML is a data standard used to describe augmented reality (AR) scenes and their interactions with the real world. It is developed by the ARML 2.0 Standards Working Group within the OGC (Open Geospatial Consortium). It contains an XML grammar to describe the location and appearance of virtual entities in the AR scene. It also contains bindings for ECMAScript, a scripting language specification, that allow dynamic access to the properties of the virtual items as well as event handling.

Since the ARML is built on an object model that is generic, it allows serialization in several languages. As of now, ARML defines XML serialization and JSON serialization for the ECMAScript bindings. The ARML object model consists of the following concepts:

Features: A Feature describes the physical object that should be augmented in the scene. The object is described by a set of metadata such as ID, name and description. A Feature has one or more Anchors.

Visual Assets: This describes the appearance of the virtual objects in the augmented scene. Visual Assets that can be described include plain text, images, HTML content and 3D models. They can be oriented and scaled.

Anchors: An Anchor describes the location of the physical object in the real world. Four different Anchor types are Geometries, Trackables, RelativeTo and ScreenAnchor.
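
To show how these three concepts relate, here is a small sketch of the object model as plain Python data classes. It is not the ARML schema or an ARML library: the class and field names are illustrative, and attaching the Visual Assets to an Anchor is an assumption about how the pieces fit together.

```python
from dataclasses import dataclass, field
from typing import List

# Anchor types as named in the text above.
ANCHOR_TYPES = {"Geometries", "Trackables", "RelativeTo", "ScreenAnchor"}


@dataclass
class VisualAsset:
    """Appearance of a virtual object: plain text, image, HTML or 3D model."""
    kind: str
    content: str
    scale: float = 1.0


@dataclass
class Anchor:
    """Where the physical object is located in the real world."""
    anchor_type: str
    location: str
    assets: List[VisualAsset] = field(default_factory=list)  # assumed link

    def __post_init__(self):
        if self.anchor_type not in ANCHOR_TYPES:
            raise ValueError(f"unknown anchor type: {self.anchor_type}")


@dataclass
class Feature:
    """The physical object to be augmented, plus its metadata."""
    id: str
    name: str
    description: str = ""
    anchors: List[Anchor] = field(default_factory=list)

    def validate(self):
        # A Feature has one or more Anchors.
        if not self.anchors:
            raise ValueError("a Feature needs at least one Anchor")


if __name__ == "__main__":
    label = VisualAsset(kind="text", content="City Museum")
    anchor = Anchor("Geometries", "hypothetical lat/lon of the building", [label])
    museum = Feature(id="museum-1", name="City Museum", anchors=[anchor])
    museum.validate()
    print(museum)
```

Because the model is generic, the same objects could be written out as XML or JSON, which is the idea behind the serializations mentioned above.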

Microsoft HoloLens

HoloLens is essentially a holographic computer, built into a headset, that lets you see, hear, and interact with holograms within an environment such as a living room or an office space. Unlike Mixed Reality devices such as Google Glass, which are peripheral or auxiliary devices that need to be connected wirelessly to another processing device, HoloLens is a Windows 10 PC in its own right. HoloLens contains high-definition lenses and spatial sound technology to create an immersive, interactive holographic experience.

Input for a HoloLens can be received via gaze, gestures, voice, gamepad and motion controllers. It also receives perception and spatial features such as coordinates, spatial sound and spatial mapping. All mixed reality devices, including HoloLens, use the inputs already available with Windows, including mouse, keyboard, gamepads, and more. With HoloLens, hardware accessories are connected to the device via Bluetooth.

Developing for Mixed Reality

Windows 10 is built from the ground up to be compatible with mixed reality devices. Apps developed for Windows 10 are therefore compatible with multiple devices, including HoloLens and other immersive headsets. The environment used to develop for MR devices depends on the type of app we want to build.

For 2D apps, we can use any tool used for developing Universal Windows apps that target all Windows environments (Windows Phone, PC, tablets etc.). These apps will be experienced as 2D projections and can work across multiple device types.

Immersive and holographic apps, however, need tools designed to take advantage of the Windows Mixed Reality APIs. Visual Studio can be used together with 3D development tools like Unity 3D to build such apps. If we are interested in building our own engine, we can use DirectX and other Windows APIs.

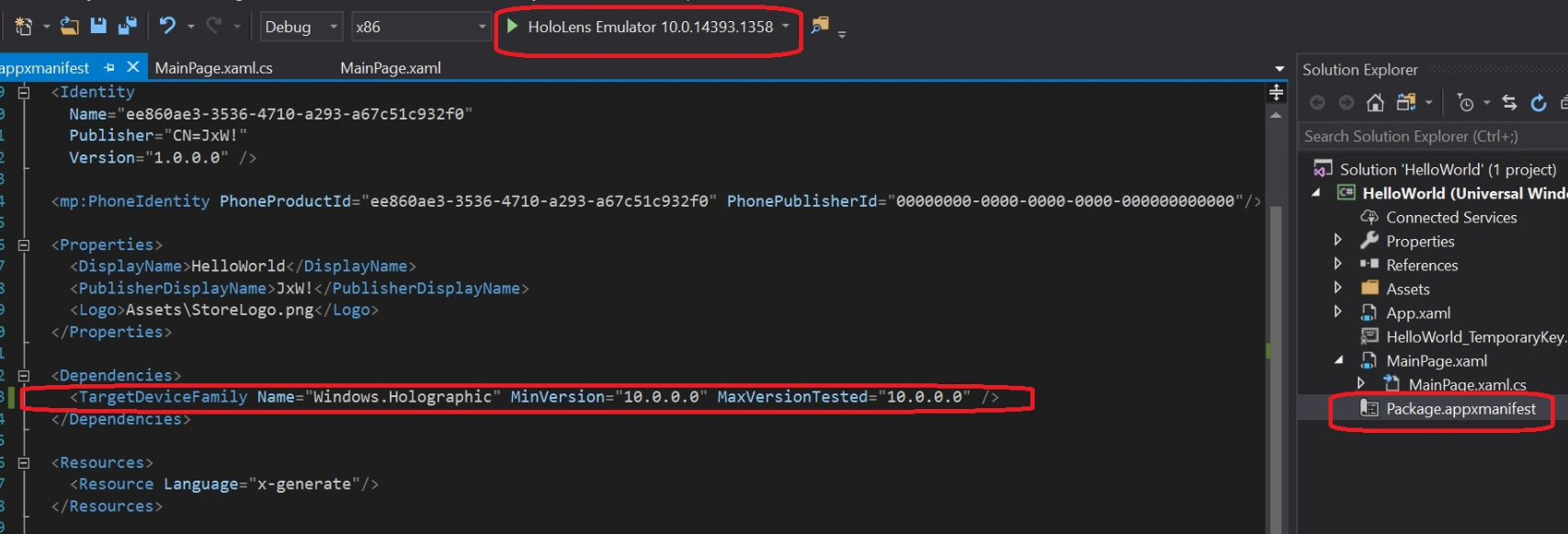

Universal Windows Platform apps exported from Unity will run on any Windows 10 device. For HoloLens, however, we should take advantage of features that are only available on HoloLens. To achieve this, we need to set the TargetDeviceFamily to “Windows.Holographic” in the Package.appxmanifest file in Visual Studio. The solution built this way can be run on the HoloLens Emulator.
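
As a quick sanity check before building, a small script can confirm which device families a manifest targets. The sketch below parses a trimmed-down sample manifest; the version numbers in it are placeholders rather than recommended values, and the helper name `target_device_families` is made up for this example. A real Package.appxmanifest contains many more elements.

```python
import xml.etree.ElementTree as ET

# Trimmed, illustrative manifest. The version numbers are placeholders.
SAMPLE_MANIFEST = """
<Package xmlns="http://schemas.microsoft.com/appx/manifest/foundation/windows10">
  <Dependencies>
    <TargetDeviceFamily Name="Windows.Holographic"
                        MinVersion="10.0.10240.0"
                        MaxVersionTested="10.0.10586.0" />
  </Dependencies>
</Package>
"""


def target_device_families(manifest_xml):
    """Return the Name attribute of every TargetDeviceFamily element."""
    root = ET.fromstring(manifest_xml.strip())
    names = []
    for element in root.iter():
        # Drop the "{namespace}" prefix that ElementTree adds to tag names.
        local_name = element.tag.rsplit("}", 1)[-1]
        if local_name == "TargetDeviceFamily":
            names.append(element.get("Name"))
    return names


def targets_hololens(manifest_xml):
    """True when the manifest declares the Windows.Holographic family."""
    return "Windows.Holographic" in target_device_families(manifest_xml)


if __name__ == "__main__":
    print(target_device_families(SAMPLE_MANIFEST))
    print(targets_hololens(SAMPLE_MANIFEST))
```

To check a real project, read the manifest file from disk and pass its contents to `targets_hololens`.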

Gaze is how focus is applied to holograms. It is the centre of the field of view when a user looks through the HoloLens, and it essentially acts as a mouse cursor. This cursor can be custom designed for your app. HoloLens uses the position and orientation of the user’s head, not their eyes, to determine their gaze vector. Once an object is targeted with gaze, gestures can be used for the actual interaction. The most common gesture is the “Tap”, which works like a left mouse click. “Tap and Hold” can be used to move objects in 3D space. Events can also be fired using custom voice commands. RayCast, GestureRecognizer and KeywordRecognizer are some of the objects, and OnSelect, OnPhraseRecognized, OnCollisionEnter, OnCollisionStay and OnCollisionExit are some of the useful event handlers, that can be used in the development environments to capture these interactions.
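
The geometry behind gaze targeting can be sketched independently of any engine. The example below casts a ray from the head position along the head’s forward direction and tests it against holograms approximated as spheres. It only illustrates the idea of a raycast and is not the Unity or HoloLens API: the function names, the sphere approximation and the sample scene are assumptions.

```python
import math


def gaze_hit_distance(head_position, head_forward, center, radius):
    """Distance along the gaze ray to a sphere, or None if the gaze misses it."""
    length = math.sqrt(sum(v * v for v in head_forward))
    direction = [v / length for v in head_forward]  # normalise the gaze vector
    offset = [h - c for h, c in zip(head_position, center)]
    # Solve |offset + t * direction|^2 = radius^2 for t (a quadratic in t).
    b = 2.0 * sum(o * d for o, d in zip(offset, direction))
    c = sum(o * o for o in offset) - radius * radius
    discriminant = b * b - 4.0 * c
    if discriminant < 0:
        return None  # the ray passes beside the sphere
    root = math.sqrt(discriminant)
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t >= 0:
            return t  # nearest intersection in front of the user
    return None  # the sphere is entirely behind the user


def gazed_hologram(head_position, head_forward, holograms):
    """Name of the closest hologram under the gaze cursor, or None.

    holograms: dict mapping a name to a (center, radius) pair.
    """
    hits = []
    for name, (center, radius) in holograms.items():
        distance = gaze_hit_distance(head_position, head_forward, center, radius)
        if distance is not None:
            hits.append((distance, name))
    return min(hits)[1] if hits else None


if __name__ == "__main__":
    scene = {
        "cat": ((0.0, 0.0, -3.0), 0.5),
        "ball": ((2.0, 0.0, -3.0), 0.5),
    }
    print(gazed_hologram((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), scene))
```

An app would run a test like this every frame, place the cursor at the hit point and route a “Tap” to whichever hologram is currently under the gaze.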

Why Discuss Now?

It is hard to do the topic justice in a brief blog, but the idea is to open the door to the possibilities and development scope of Mixed Reality environments and to begin conversations about them. Mixed Reality has immense scope in terms of its applications, including but not limited to fields like literature, archaeology, visual art, commerce, architecture, education, medicine, industrial design, flight training, the military, emergency management, video games and beyond.

For software developers and other stakeholders developing traditional software for desktop, mobile and corporate environments, creating apps and experiences for HoloLens and similar devices is a step into the unknown. The way we think about experiences in a 3D space will challenge and change our traditional understanding of software or application development. The whole development environment is set to change immediately, if not sooner.