Amidst project deadlines, status review meetings and proposal presentation preparations on a typical Monday morning, a new email window popped up on my screen bearing the subject line “DevOps Initiative at ThinkPalm”. I was curious to find out more, since DevOps was the latest buzzword in business circles, but all I could grasp was that it was one of the new-age practices that had seemingly surpassed the waterfall and agile models of product development and delivery. A week later another email followed, announcing that we were scheduled to attend a DevOps training session by an external subject matter expert (SME).

As a traditional tester coming from what now seem like the age-old “Waterfall model” days, I felt pretty ancient when exposed to the DevOps world. There were references to “Continuous Integration”, “Continuous Deployment” and the like, and a truckload of tools to use for each phase…or rather, for each process. There didn’t seem to be any “phases” in DevOps. All processes seemed to run together like clockwork!

Before I could get used to the DevOps jargon, our client manager, who apparently was much more DevOps-savvy than I was, had signed on the dotted line for a DevOps implementation in our project, the first for the Communications Business Unit (CBU).

It took me some time to get out of the Waterfall model comfort zone, bypass the Agile model and dive into the DevOps Practice.

I had a mental block: I assumed DevOps suited only the enterprise world of website hosting and application development and deployment. Practicing it in the telecom domain cleared the air for me. The DevOps world is a lot less hazy to navigate now, and I feel ready to share a piece of my knowledge with the world. So, here goes…

What is DevOps and how/why did it develop?

DevOps is a culture. It’s not a tool, a process or a phase. It is a practice and a cultural shift that has to be lived in order to bring about a radical transformation in the way software and products are developed, tested and deployed.

A few years ago, the waterfall practice came under fire: methodical planning, elaborate designs, reviews and re-reviews of millions of lines of code, months of implementation, weeks of slow manual testing and a final rush to delivery with an overweight release note. The self-proclaimed Agile geeks scoffed at it and promised a faster, more efficient and more cost-effective way of deploying solutions and products. However, the Agile process seemed to integrate only Development and QA. Operations was forgotten, which led to a huge backlog for the Operations team, who could not push product releases out as fast as they were being developed.

Then DevOps made its grand entry, having done its homework on the drawbacks of the previous processes, with a simple mantra: close integration and interaction of the four basic, all-pervading blocks of any business, namely Development, QA, Operations and Business Users. No one seemed to be left behind.

It is a convergence of “Continuous” processes (Continuous Integration, Continuous Delivery, Continuous Optimization, Continuous Monitoring and Continuous Deployment) plus automation of each process with relevant tools.

Summing it up, DevOps is a convergence of people, processes and tools to enable adaptive IT and business agility.

OK. Understood. All very impressive! But how does it work in the telecom/datacom industry? I understand the need to tightly tie together development and QA: that’s continuous integration. I also understand the need to tightly tie together development, QA and operations: that’s continuous delivery. But in the telecom/datacom world, which involves physical boxes that cost millions of dollars and are deployed miles away from their manufacturer, I could not see where Continuous Deployment came into the picture. The answer lay in the opening lines of the introduction to DevOps that we had all been exposed to: “DevOps is a mindset/culture and not a process”. Just as the telecom industry had embraced Agile and scaled it to its needs, we would scale and customize DevOps to our needs as well! With that answered, I set out to implement our first DevOps practice in the Communications BU of ThinkPalm, with expert help from a bunch of DevOps architects here at ThinkPalm.

Why and how did we build our first DevOps setup for CBU?

The Client: The client is a leading telecom equipment manufacturer in the US.

The Problem Statement: A lack of integration testing and a lengthy test cycle meant that build failures and defects were detected very late in the product development life cycle, leading to expensive bug fixes and delayed delivery.

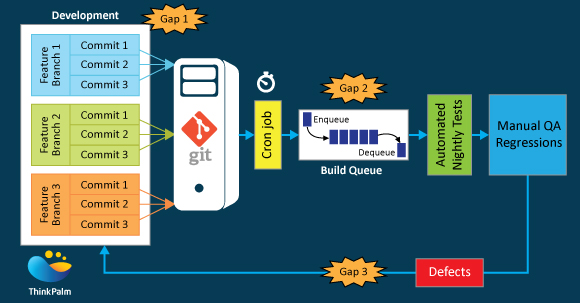

- The developers would perform a commit of code changes to various Feature Branches or the master/trunk. There would be multiple commits across various time zones into the main Git server.

- At a predefined time (once per 24 hours), a cron job would pick up a pre-defined branch to perform a build.

- This build would then be queued for automated nightly test runs.

- Multiple stable automated nightly runs would lead to a “Release Candidate (RC)” being built.

- The Release Candidate would be picked up by manual QA for regression runs.

As depicted in the figure above, this process contained gaps in terms of testing:

Gap 1:

- Multiple commits before a build led to difficulty in tracing back build failures to specific commits.

- Absence of integration testing before commits.

Gap 2:

- Multiple builds queued for testing, but only one build tested per night.

- Not all branches (and as a result not all commits) could be tested due to manual changes in priorities.

Gap 3:

- Cascading effect of Gap 1 and Gap 2: builds reach manual QA much later.

- Defects, sometimes release blockers, are reported during the regression cycle, leaving development less time to fix and re-test.

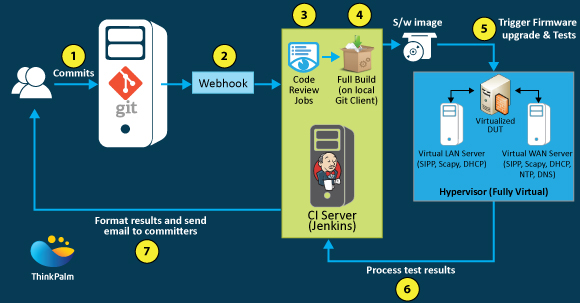

To fill some of these gaps, a Continuous Integration process was introduced. The goal for this project was to create a Jenkins test suite, integrated with the client’s Git server, that could initiate a test-suite run for each commit.

- Developers perform a commit of their code changes into the Git server for various branches.

- A post-commit webhook in Bitbucket triggers the build pipeline on Jenkins for the specific branch after every commit.

- Pre-build tests like Doxygen, code review tests and code analysis tests are run.

- A full build is performed to produce a binary image on the local Git client.

- The pipeline connects to the test server over SSH to trigger functionality tests.

- The binary image is deployed on the device under test (DUT).

- Automated tests are executed on the DUT.

- Results are captured and processed by Jenkins.

- Test reports are formatted and emailed automatically to the committer along with the commit information.
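The steps above can be sketched as a small pipeline runner. This is only an illustration, not our actual Jenkins configuration: the names `CommitInfo`, `run_pipeline`, `format_report` and the placeholder stage functions are all hypothetical, and stopping at the first failed stage is my assumption about sensible behavior. Real stages would invoke the build system, SSH into the test server and drive the DUT.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class CommitInfo:
    """Hypothetical record of what the webhook tells the pipeline."""
    sha: str
    branch: str
    committer_email: str


@dataclass
class PipelineResult:
    commit: CommitInfo
    stage_results: List[Tuple[str, bool]] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        # A run passes only if every stage that ran reported success.
        return all(ok for _, ok in self.stage_results)


Stage = Tuple[str, Callable[[CommitInfo], bool]]


def run_pipeline(commit: CommitInfo, stages: List[Stage]) -> PipelineResult:
    """Run the stages in order and stop at the first failure (an assumption)."""
    result = PipelineResult(commit)
    for name, stage in stages:
        ok = stage(commit)
        result.stage_results.append((name, ok))
        if not ok:
            break
    return result


def format_report(result: PipelineResult) -> str:
    """Build the plain-text report that would be emailed to the committer."""
    status = "PASSED" if result.passed else "FAILED"
    lines = [
        f"To: {result.commit.committer_email}",
        f"Commit {result.commit.sha} on {result.commit.branch}: {status}",
    ]
    lines += [
        f"  {name}: {'ok' if ok else 'FAILED'}"
        for name, ok in result.stage_results
    ]
    return "\n".join(lines)


def always_ok(_commit: CommitInfo) -> bool:
    """Placeholder stage; a real one would run a tool and check its exit code."""
    return True


# Stage names mirror the steps in the list above; the functions are stand-ins.
STAGES: List[Stage] = [
    ("pre-build checks", always_ok),
    ("full build", always_ok),
    ("deploy to DUT", always_ok),
    ("automated tests", always_ok),
]

if __name__ == "__main__":
    commit = CommitInfo("abc123", "feature/x", "dev@example.com")
    print(format_report(run_pipeline(commit, STAGES)))
```

The point of the sketch is the shape of the flow: one commit goes in, each stage gates the next, and the committer gets a report tied to that single commit.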

Other highlights:

- Completely virtual test bed with no cabling between devices in the test setup [implemented using VirtualBox].

- Automated branch-specific build process after every developer commit, using a webhook in Bitbucket for Jenkins.

- Multi-branch support.

- Queuing of builds if a commit occurs while a build is being tested.

- Ability for developers to submit private builds for testing on the CI setup.
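The build queuing mentioned in the highlights can be pictured with a minimal sketch like the one below. Jenkins handles queuing for us, so this is not how our setup is implemented; the `BuildQueue` class and its method names are hypothetical, and the first-in, first-out ordering is my assumption for illustration.

```python
from collections import deque
from typing import Deque, Optional


class BuildQueue:
    """Holds commits that arrive while the single test bed is busy."""

    def __init__(self) -> None:
        self._pending: Deque[str] = deque()
        self.running: Optional[str] = None

    def on_commit(self, sha: str) -> Optional[str]:
        """Start a run if the test bed is idle, otherwise queue the commit.

        Returns the commit that started running, or None if it was queued.
        """
        if self.running is None:
            self.running = sha
            return sha
        self._pending.append(sha)
        return None

    def on_run_finished(self) -> Optional[str]:
        """Mark the current run done and start the oldest queued commit, if any."""
        self.running = self._pending.popleft() if self._pending else None
        return self.running


if __name__ == "__main__":
    queue = BuildQueue()
    queue.on_commit("commit-1")  # test bed idle, so this run starts at once
    queue.on_commit("commit-2")  # test bed busy, so this commit waits
    print(queue.on_run_finished())  # next commit picked up from the queue
```

Because every commit eventually gets its own run, none is skipped the way branches were in the nightly setup.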

As depicted above, Continuous Integration of the following processes was achieved:

- Development

- Pre-build testing

- Build process

- Deployment of the build on the DUT

- Automated sanity & integration testing

This has led to stable, sanity-tested builds being submitted to QA for regression runs. Early detection of blocker issues gave the development team enough time to fix defects, leading to faster release and deployment of software to the end customer.

As for me, it has been a refreshing experience to realize that DevOps can be adopted in any domain and that DevOps need not be the be-all and end-all of project execution. In the communications world, it perhaps can never be a complete replacement for existing processes. However, it has all the traits of an efficient complement to them, one that results in faster and better-quality deliverables.

To learn more about our DevOps services, check out https://thinkpalm.com/JP/services/devops/